Research Works

The projects are ordered from newest to oldest. For easier navigation, you may prefer to use the by-year and by-coauthor indices below. Each project includes a technical Abstract, a high-level Overview of the background, and sometimes a jargon-free description called Chillview.

→ By year: 2023 2022 2021 2020 2019 2018 2017 2016 2015 2014 2013 2012 2011 2010 2009 2008

→ By coauthor:

Farhan Hasan (↓↓) Doug Hellinger (↓↓) Joseph N. Burchett (↓↓↓↓↓↓↓↓↓) David Abramov (↓↓) Cameron Hummels (↓) Angus G. Forbes (↓↓↓↓↓↓↓↓↓) J. Xavier Prochaska (↓↓↓↓↓↓) Hongwei (Henry) Zhou (↓) Pranav Anand (↓) Sunil Simha (↓) Jay S. Chittidi (↓) Nicolas Tejos (↓↓) Todd M. Tripp (↓) Rongmon Bordoloi (↓) Manu M. Thomas (↓) Jaroslav Krivanek RIP (↓↓↓↓↓↓) Sebastian Herholz (↓↓↓) Hendrik Lensch (↓↓↓) Yangyang Zhao (↓) Derek Nowrouzezahrai (↓) Jens Schindel (↓) Tomas Iser (↓) Alexander Wilkie (↓↓↓↓↓↓) Denis Sumin (↓↓) Ran Zhang (↓↓) Tim Weyrich (↓↓) Bernd Bickel (↓↓) Karol Myszkowski (↓↓) Jiri Vorba (↓) Tobias Ritschel (↓↓↓↓↓↓↓↓) Hans-Peter Seidel (↓↓↓↓↓↓↓↓) Pablo Bauszat (↓↓) Marcus Magnor (↓↓) Carsten Dachsbacher (↓↓) Petr Kmoch (↓↓↓)

→ Filaments of The Slime Mold Cosmic Web And How They Affect Galaxy Evolution

submitted to Astrophysical Journal Letters (2023)

Hasan F, Burchett JN, Hellinger D, Elek O, Nagai D, Faber SM, Primack JR, Koo DC, Mandelker N, Woo J

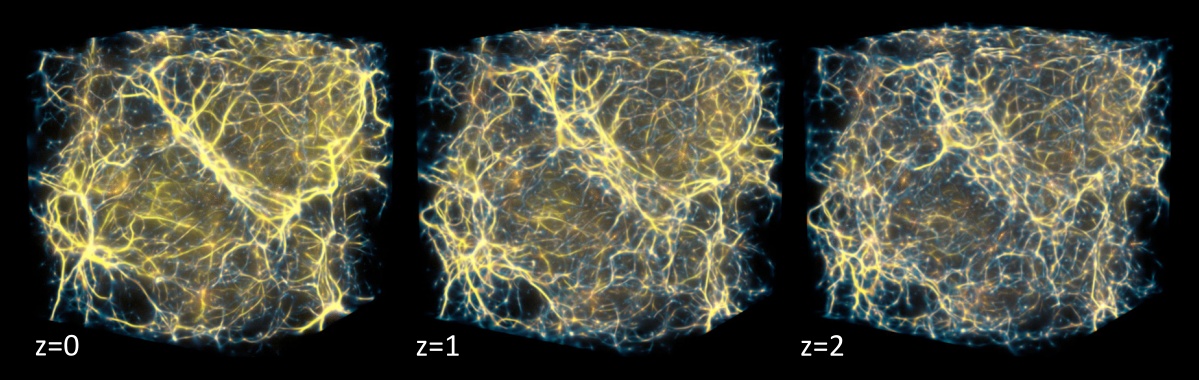

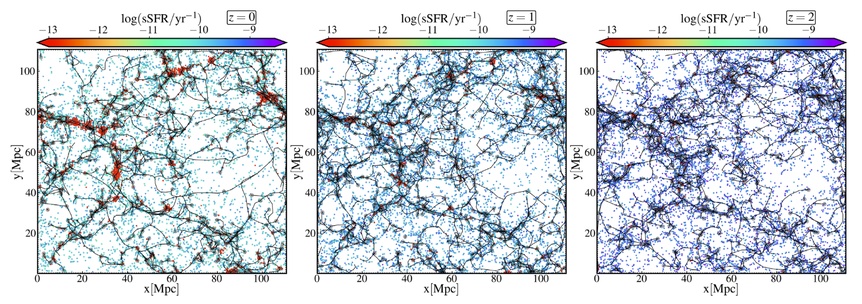

We present a novel method for identifying cosmic web filaments using the IllustrisTNG (TNG100) cosmological simulations and investigate the impact of filaments on galaxies. We compare the use of cosmic density field estimates from the Delaunay Tessellation Field Estimator (DTFE) and the Monte Carlo Physarum Machine (MCPM), which is inspired by the slime mold organism, in the DisPerSE structure identification framework. The MCPM-based reconstruction identifies filaments with higher fidelity, finding more low-prominence/diffuse filaments and better tracing the true underlying matter distribution than the DTFE-based reconstruction. Using our new filament catalogs, we find that most galaxies are located within 1.5-2.5 Mpc of a filamentary spine, with little change in the median specific star formation rate and the median galactic gas fraction with distance to the nearest filament. Instead, we introduce the filament line density, Σ_fil(MCPM), as the total MCPM overdensity per unit length of a local filament segment, and find that this parameter is a superior predictor of galactic gas supply and quenching. Our results indicate that most galaxies are quenched and gas-poor near high line-density filaments at z<1. At z=0, quenching in log(M*/Msun)>10.5 galaxies is mainly driven by mass, while lower-mass galaxies are significantly affected by the filament line density. In high line-density filaments, satellites are strongly quenched, whereas centrals have reduced star formation, but not gas fraction, at z<0.5. We discuss the prospect of applying our new filament identification method to galaxy surveys with SDSS, DESI, Subaru PFS, etc. to elucidate the effect of large-scale structure on galaxy formation.
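As a rough illustration of the filament line density Σ_fil defined in the abstract, the Python sketch below sums the overdensity of all voxels lying within a tube around one filament segment and divides by the segment length. The tube radius, the `(center, value)` voxel format, and the function names are hypothetical choices for this sketch; the paper's exact rule for assigning voxels to a segment may differ.

```python
import math

def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment a-b (3D tuples)."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    ab2 = sum(c * c for c in ab)
    # Parameter of the orthogonal projection of p onto the segment, clamped to [0, 1].
    t = 0.0 if ab2 == 0 else max(0.0, min(1.0, sum(u * v for u, v in zip(ap, ab)) / ab2))
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(p, closest)

def filament_line_density(a, b, voxels, radius):
    """Hypothetical Sigma_fil: summed overdensity of the voxels whose centres lie
    within `radius` of segment a-b, divided by the segment length."""
    length = math.dist(a, b)
    total = sum(value for center, value in voxels
                if point_segment_distance(center, a, b) <= radius)
    return total / length

# Usage: a 2 Mpc segment; only the first two voxels fall inside a 1 Mpc tube.
voxels = [((1.0, 0.0, 0.0), 3.0), ((1.0, 0.5, 0.0), 1.0), ((1.0, 5.0, 0.0), 10.0)]
sigma_fil = filament_line_density((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), voxels, radius=1.0)
```

A tube-based selection keeps the quantity a "total per unit length" rather than a mean along the spine, which matches the wording of the definition.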

This is the second in a series of research articles investigating how the properties of a galaxy depend on its position within the cosmic web (i.e., the network-like structure of the intergalactic medium). The properties of interest include stellar mass, star formation rate, color, and other critical markers of galactic "identity". It is well known that the environment in which a galaxy has evolved significantly impacts its life cycle; this research deepens that understanding, with the ultimate goal of bridging galactic and extragalactic physics. Instrumental in this effort is the MCPM methodology, which provides a detailed reconstruction of the cosmic web density field and thus enables us to quantitatively characterize the relationships in question.

Crucially, this study compares two methods of estimating the density field of the intergalactic medium: the Delaunay Tessellation Field Estimator (DTFE), which is well established but known to be incomplete, and the Monte Carlo Physarum Machine (MCPM), an agent-based algorithm capable of reconstructing a complete density map of the cosmic web, including anisotropic structures and fainter filaments. As a result, the topological extractor in DisPerSE yields a significantly more complete filamentary reconstruction. The study contains results for different redshifts z, which correspond to different epochs in the evolution of the universe. Be sure to check the paper for the beautiful, detailed plots made by Farhan Hasan.
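For intuition about the DTFE side of this comparison: DTFE assigns each tracer a density of (D+1)·m divided by the total volume of all Delaunay cells that share that tracer as a vertex, where D is the number of spatial dimensions. In one dimension the Delaunay cells are simply the intervals between neighbouring points, which makes the estimator easy to sketch. This toy 1D version is our own illustration, not the 3D pipeline used in the paper, and it leaves the two boundary points undefined.

```python
def dtfe_density_1d(positions, masses):
    """1D Delaunay Tessellation Field Estimator (illustrative sketch).

    For an interior point i, the contiguous Delaunay cells are [x_{i-1}, x_i]
    and [x_i, x_{i+1}], so rho_i = (D + 1) * m_i / (x_{i+1} - x_{i-1}), D = 1.
    Boundary points get None because their neighbourhood is incomplete.
    Coincident positions would divide by zero and are not handled here.
    """
    order = sorted(range(len(positions)), key=lambda i: positions[i])
    densities = [None] * len(positions)
    for rank in range(1, len(order) - 1):
        i = order[rank]
        left = positions[order[rank - 1]]
        right = positions[order[rank + 1]]
        densities[i] = 2.0 * masses[i] / (right - left)
    return densities

# Usage: unit masses at unit spacing give unit density in the interior.
uniform = dtfe_density_1d([0.0, 1.0, 2.0, 3.0], [1.0, 1.0, 1.0, 1.0])
```

The estimator adapts automatically to the local sampling: closely spaced tracers yield small cells and hence high densities, but it only "sees" structure where tracers exist, which is one reason it can miss faint filaments.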

In this first-of-its-kind study, we use a biologically inspired algorithm to map the gaseous medium around and between galaxies (known as the 'cosmic web'). The resulting map has unprecedented detail, allowing us to study the relationship between each galaxy's position within the cosmic web and its properties, such as its star formation rate and overall mass.

→ The Evolving Effect Of Cosmic Web Environment On Galaxy Quenching

Astrophysical Journal Letters (2023)

Hasan F, Burchett JN, Abeyta A, Hellinger D, Mandelker N, Primack JR, Faber SM, Koo DC, Elek O, Nagai D

![[PDF]](./meta/pdf.png) OA Article

![[HTM]](./meta/htm.png) ArXiv Version

![[AVI]](./meta/avi.png) Conference Talk

We investigate how cosmic web structures affect galaxy quenching in the IllustrisTNG (TNG100) cosmological simulations by reconstructing the cosmic web within each snapshot using the DisPerSE framework. We measure the comoving distance from each galaxy with stellar mass log(M*/Msun)>8 to the nearest node (d_node) and the nearest filament spine (d_fil) to study the dependence of both the median specific star formation rate (⟨sSFR⟩) and the median gas fraction (⟨f_gas⟩) on these distances. We find that the ⟨sSFR⟩ of galaxies depends on the cosmic web environment only at z<2, with the dependence increasing with time. At z<0.5, 8<log(M*/Msun)<9 galaxies are quenched at d_node<1 Mpc and have significantly suppressed star formation at d_fil<1 Mpc, trends driven mostly by satellite galaxies. At z<1, in contrast to the monotonic drop in ⟨sSFR⟩ of log(M*/Msun)<10 galaxies with decreasing d_node and d_fil, galaxies (both centrals and satellites) experience an upturn in ⟨sSFR⟩ at d_node<0.2 Mpc. Much of this cosmic web dependence of star formation activity can be explained by an evolution in ⟨f_gas⟩. Our results suggest that over the past ~10 Gyr, low-mass satellites have been quenched by rapid gas stripping in dense environments near nodes and by gradual gas starvation in intermediate-density environments near filaments. At earlier times, cosmic web structures efficiently channeled cold gas into most galaxies. State-of-the-art ongoing spectroscopic surveys such as the Sloan Digital Sky Survey and DESI, as well as those planned with the Subaru Prime Focus Spectrograph, JWST, and Roman, are required to test our predictions against observations.
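The distance measurements described in this abstract can be sketched in a few lines of Python. This is our own minimal illustration, assuming that galaxies and nodes are 3D comoving positions in Mpc and that each filament spine is given as a list of straight segments; it ignores the periodic boundaries of the simulation box, and all function names are hypothetical.

```python
import math
from statistics import median

def point_segment_distance(p, a, b):
    """Distance from point p to the straight segment a-b (3D tuples)."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    ab2 = sum(c * c for c in ab)
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = 0.0 if ab2 == 0 else max(0.0, min(1.0, sum(u * v for u, v in zip(ap, ab)) / ab2))
    return math.dist(p, [ai + t * c for ai, c in zip(a, ab)])

def d_node(galaxy, nodes):
    """Distance to the nearest cosmic web node."""
    return min(math.dist(galaxy, n) for n in nodes)

def d_fil(galaxy, spines):
    """Distance to the nearest filament spine; each spine is a list of (a, b) segments."""
    return min(point_segment_distance(galaxy, a, b) for spine in spines for a, b in spine)

def median_in_bins(distances, values, edges):
    """Median of `values` in each [edges[k], edges[k+1]) distance bin (None if empty)."""
    medians = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = [v for d, v in zip(distances, values) if lo <= d < hi]
        medians.append(median(in_bin) if in_bin else None)
    return medians

# Usage with toy data: one node at the origin, one straight spine along y = 5 Mpc.
nodes = [(0.0, 0.0, 0.0)]
spines = [[((0.0, 5.0, 0.0), (10.0, 5.0, 0.0))]]
galaxies = [(0.5, 0.0, 0.0), (0.8, 0.0, 0.0), (3.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
ssfr = [1e-12, 3e-12, 1e-10, 2e-10]  # yr^-1, made-up values
dn = [d_node(g, nodes) for g in galaxies]
profile = median_in_bins(dn, ssfr, [0.0, 1.0, 5.0])
```

Binning by distance and taking the median per bin is what turns per-galaxy measurements into the ⟨sSFR⟩(d_node) and ⟨sSFR⟩(d_fil) trends discussed above; a real analysis would also split by stellar mass, redshift, and central/satellite status.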

This is the first in a series of research articles investigating how the properties of a galaxy depend on its position within the cosmic web (i.e., the network-like structure of the intergalactic medium). The properties of interest include stellar mass, star formation rate, color, and other critical markers of galactic "identity". It is well known that the environment in which a galaxy evolved has a significant impact on its life cycle; this research deepens that understanding, with the ultimate goal of bridging galactic and extragalactic physics. Instrumental in this effort is the MCPM methodology, which provides a detailed reconstruction of the cosmic web density field and thus enables us to quantitatively characterize the relationships in question.

→ SDSS DR17: The Cosmic Slime Value Added Catalog

ArXiv (astro-ph)

Wilde MC, Elek O, Burchett JN, Nagai D, Prochaska JX, Werk J, Tuttle S, Forbes AG

![[ICON]](./meta/htm.png) ArXiv Version

![[ICON]](./meta/htm.png) SDSSv17 Release Paper

![[ICON]](./meta/htm.png) MCPM Cosmic Web VAC Data

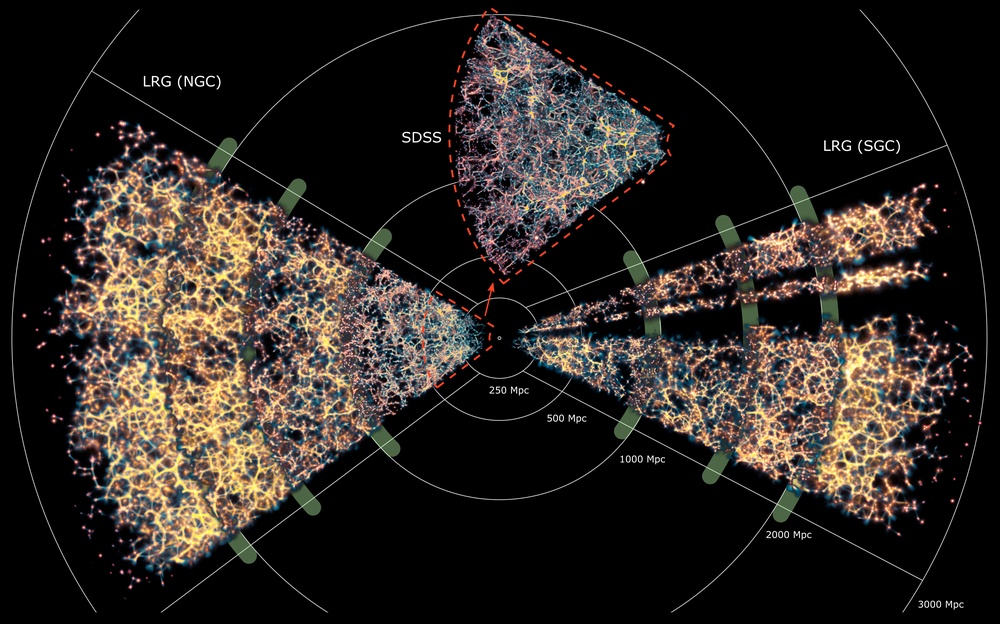

The "cosmic web", the filamentary large-scale structure in a cold dark matter Universe, is readily apparent via galaxy tracers in spectroscopic surveys. However, the underlying dark matter structure is as yet unobservable, and mapping the diffuse gas permeating it lies beyond practical observational capabilities. A recently developed technique, inspired by the growth and movement of Physarum polycephalum "slime mold", has been used to map the cosmic web of a low redshift sub-sample of the SDSS spectroscopic galaxy catalog. This model, the Monte Carlo Physarum Machine (MCPM), showed promise in reconstructing the cosmic web. Here, we improve the formalism used in calibrating the MCPM to better recreate the Bolshoi-Planck cosmological simulation's density distributions and apply it to a significantly larger cosmological volume than previous works, using the Sloan Digital Sky Survey (SDSS, z<0.1) and the Extended Baryon Oscillation Spectroscopic Survey (eBOSS) Luminous Red Galaxy (LRG, z<0.5) spectroscopic catalogs. We present the "Cosmic Slime Value Added Catalog", which provides estimates of the cosmic overdensity for the sample of galaxies probed spectroscopically by the above SDSS surveys. In addition, we provide the fully reconstructed 3D density cubes of these volumes. These data products were released as part of Sloan Digital Sky Survey Data Release 17 and are publicly available. We present the input catalogs and the methodology for constructing these data products. We also highlight exciting potential applications to galaxy evolution, cosmology, the intergalactic and circumgalactic medium, and the localization of transient phenomena.

This article documents and supports the cosmic web catalog released as part of the 17th data release of the Sloan Digital Sky Survey (SDSS v17). Our team has produced a so-called "value added catalog" (VAC), a dataset accompanying the main release of the observational data in SDSS. Our VAC adds a reconstruction of the cosmic web overdensity field produced from the galaxies in the main SDSS v17 catalog.

The dataset is openly available and documented in the SDSS v17 release paper, as well as in much more detail in a dedicated paper describing the algorithmic methodology used to produce the VAC. In summary, we apply the Monte Carlo Physarum Machine algorithm to produce the anisotropic density distribution of the cosmic web, a custom clustering-based method to remove the so-called "finger of god" artifacts from the observed galaxy distribution, and an optimal-transport-based mapping to produce the final overdensity estimates.
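To give a feel for what an optimal-transport-based mapping can look like in the simplest case: between two one-dimensional distributions, the optimal transport map (for convex costs) is the monotone rearrangement that sends each value to the value at the same quantile of the target distribution. The sketch below applies this quantile matching to map raw MCPM trace values onto a reference density sample (for instance one drawn from a simulation such as Bolshoi-Planck). Treating the calibration as a plain 1D quantile match is our assumption for illustration; the paper's actual mapping may be more involved, and the function names are hypothetical.

```python
from bisect import bisect_right

def empirical_quantile(sorted_vals, q):
    """Linearly interpolated q-quantile (0 <= q <= 1) of a pre-sorted list."""
    pos = q * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1 - frac) + sorted_vals[hi] * frac

def quantile_map(source_values, reference_values):
    """Monotone (1D optimal-transport) map: send each source value to the
    reference value found at the same empirical quantile."""
    src_sorted = sorted(source_values)
    ref_sorted = sorted(reference_values)
    n = len(src_sorted)
    mapped = []
    for v in source_values:
        rank = bisect_right(src_sorted, v) - 1  # index of v in the sorted source
        q = rank / (n - 1) if n > 1 else 0.0    # normalised rank in [0, 1]
        mapped.append(empirical_quantile(ref_sorted, q))
    return mapped

# Usage: raw trace values are replaced by reference densities, order preserved.
calibrated = quantile_map([10.0, 30.0, 20.0], [1.0, 2.0, 3.0])
```

The map is monotone by construction, so denser regions in the MCPM trace remain denser after calibration; only the shape of the value distribution changes.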

This is the first open-source catalog of the cosmic web overdensity available at this scale and consistent across the entire observed region. The reconstruction includes nearly half a million galaxies from both the North and South Galactic Caps. The VAC contains two key modalities: the per-galaxy estimate of the IGM overdensity at each galaxy's position, and the entire overdensity field (volume). These data are freely available and ready to be used and cross-validated in subsequent studies. Please contact us if you have any questions about using the data in your work. If you do use it, kindly cite this paper.
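The VAC already provides per-galaxy overdensity values, but one may also want to read the overdensity volume at an arbitrary position (say, along a sightline or at a new object). A common way to do that is trilinear interpolation between the eight surrounding voxels. The sketch below assumes the cube is held as nested lists `cube[i][j][k]` with voxel centres at integer coordinates, and that the position has already been converted into fractional voxel coordinates; this layout and the function name are hypothetical and do not describe the VAC's actual file format.

```python
def trilinear_sample(cube, x, y, z):
    """Trilinearly interpolate cube[i][j][k] at continuous voxel coords (x, y, z).

    Voxel centres sit at integer coordinates; each axis needs at least 2 voxels.
    Positions outside the grid are clamped to its boundary.
    """
    dims = (len(cube), len(cube[0]), len(cube[0][0]))
    corners = []
    for c, n in zip((x, y, z), dims):
        c = min(max(c, 0.0), n - 1.0)   # clamp into the grid
        i0 = min(int(c), n - 2)         # lower-corner index, keeps i0 + 1 valid
        corners.append((i0, c - i0))    # (index, fractional offset in [0, 1])
    (i, fx), (j, fy), (k, fz) = corners
    value = 0.0
    for di, wx in ((0, 1.0 - fx), (1, fx)):
        for dj, wy in ((0, 1.0 - fy), (1, fy)):
            for dk, wz in ((0, 1.0 - fz), (1, fz)):
                value += wx * wy * wz * cube[i + di][j + dj][k + dk]
    return value

# Usage: on a linear field the interpolation is exact.
cube = [[[i + 2 * j + 4 * k for k in range(2)] for j in range(2)] for i in range(2)]
centre_value = trilinear_sample(cube, 0.5, 0.5, 0.5)
```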

→ CosmoVis: An Interactive Visual Analysis Tool for Exploring Hydrodynamic Cosmological Simulations

@ IEEE Transactions on Visualization and Computer Graphics (presented at IEEE VIS 2022)

David Abramov, Joseph N. Burchett, Oskar Elek, Cameron Hummels, J. Xavier Prochaska, Angus G. Forbes

![[ICON]](./meta/pdf.png) Article (preprint)

![[ICON]](./meta/htm.png) Live Version

![[ICON]](./meta/htm.png) Code

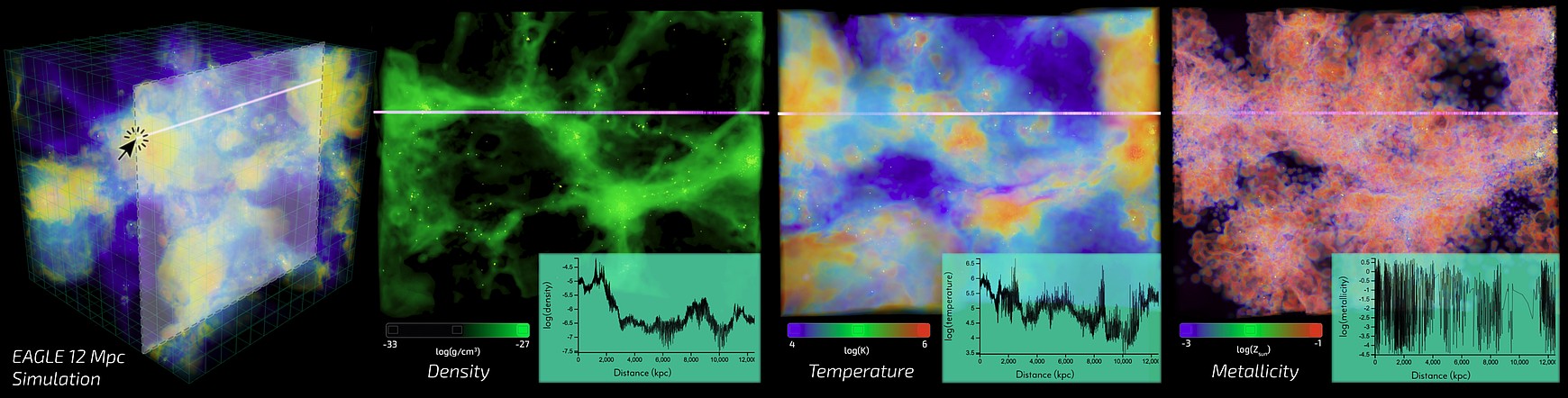

We introduce CosmoVis, an open source web-based visualization tool for the interactive analysis of massive hydrodynamic cosmological simulation data. CosmoVis was designed in close collaboration with astrophysicists to enable researchers and citizen scientists to share and explore these datasets, and to use them to investigate a range of scientific questions. CosmoVis visualizes many key gas, dark matter, and stellar attributes extracted from the source simulations, which typically consist of complex data structures multiple terabytes in size, often requiring extensive data wrangling. CosmoVis introduces a range of features to facilitate real-time analysis of these simulations, including the use of virtual skewers, simulated analogues of absorption line spectroscopy that act as spectral probes piercing the volume of gaseous cosmic medium. We explain how such synthetic spectra can be used to gain insight into the source datasets and to make functional comparisons with observational data. Furthermore, we identify the main analysis tasks that CosmoVis enables and present implementation details of the software interface and the client-server architecture. We conclude by providing details of three contemporary scientific use cases that were conducted by domain experts using the software and by documenting expert feedback from astrophysicists at different career levels.

Rigorous physics simulations are a key theoretical tool in many fields of science, including astrophysics, engineering, and biology. Typically performed with the help of supercomputers, such simulations produce massive datasets (often on the order of terabytes) containing domain-specific information that cannot be interpreted without expert knowledge.

In this project we present a tool called CosmoVis designed for interactive analysis of datasets produced by massive-scale cosmological simulations (such as EAGLE and IllustrisTNG). These simulations are performed in order to understand the formation of principal structures in the Universe, namely galaxies and the distribution of the intergalactic medium that constitutes the Cosmic web.

CosmoVis is unique in its ease of deployment: it is a web-based tool accessed directly through the browser, providing an interactive visualization of these datasets. Several datasets of interest are pre-loaded and new ones can be added. CosmoVis provides a direct volume visualization of several different modalities associated with the intergalactic medium, as well as a labeled particle visualization of the galactic ecosystems. On top of that, CosmoVis allows experts to analyze the data using manually placed 'skewers': linear measurements of spectral absorption properties of the intergalactic medium. This is illustrated in the figure above.
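The most basic quantity a skewer measures is the column density, N = ∫ n dl, i.e. the number density of the absorbing gas integrated along the line of sight. The sketch below approximates this integral with the midpoint rule along a straight skewer through a density field supplied as a Python function. It is a simplified illustration under our own assumptions: actual synthetic absorption spectra, as produced by CosmoVis, additionally require ion fractions, gas velocities, and line profiles, none of which are modelled here, and the function name is hypothetical.

```python
import math

def skewer_column_density(density, start, end, n_samples=1000):
    """Approximate N = integral of n(x) dl along the straight skewer start -> end.

    `density(x, y, z)` returns a number density; the result carries units of
    density times length (e.g. cm^-3 * cm = cm^-2 if positions are in cm).
    """
    length = math.dist(start, end)
    step = length / n_samples
    total = 0.0
    for s in range(n_samples):
        t = (s + 0.5) / n_samples  # midpoint of the s-th sub-interval
        point = tuple(a + t * (b - a) for a, b in zip(start, end))
        total += density(*point) * step
    return total

# Usage: a uniform medium of density 2 traversed over a length of 3.
uniform_n = skewer_column_density(lambda x, y, z: 2.0, (0.0, 0.0, 0.0), (3.0, 0.0, 0.0), 10)
```

Because skewers are cheap to evaluate, they suit the interactive placement described above: moving a skewer just re-runs the integration along a new line.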

With CosmoVis, astrophysicists can better understand the distribution of the intergalactic medium and the relationship between its properties and the galactic ecosystems within it.

→ Monte Carlo Physarum Machine: Characteristics of Pattern Formation in Continuous Stochastic Transport Networks

@ Artificial Life journal (Winter 2022)

Oskar Elek, Joseph N. Burchett, J. Xavier Prochaska, Angus G. Forbes

![[HTM]](./meta/htm.png) OA Article

![[htm]](./meta/htm.png) ArXiv Version

![[ppt]](./meta/ppt.png) ALIFE Slides

![[htm]](./meta/htm.png) MCPM Code

![[htm]](./meta/htm.png) Pictorial

![[bib]](./meta/bib.png) Citation

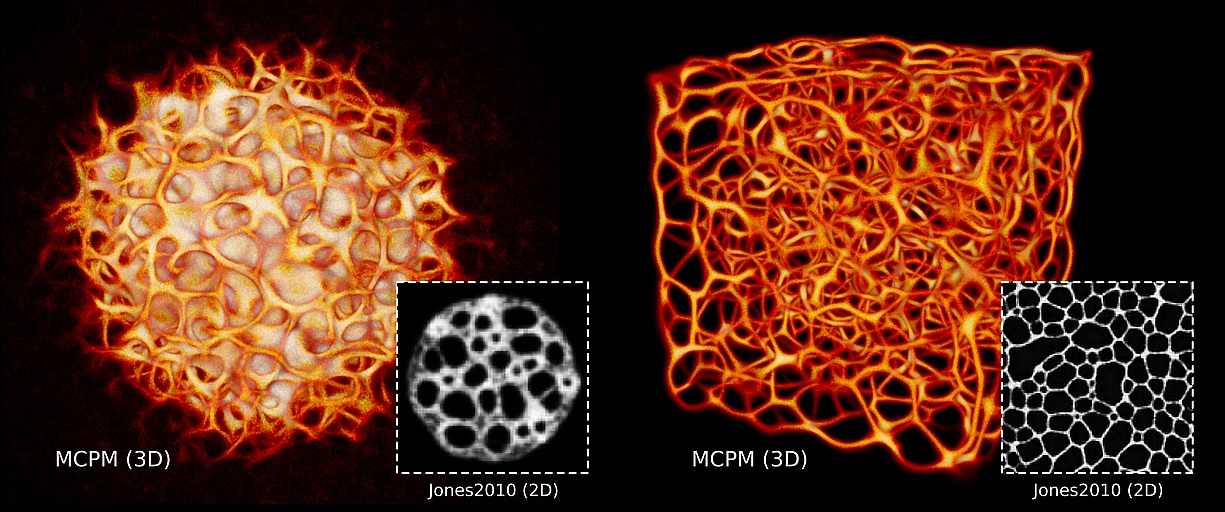

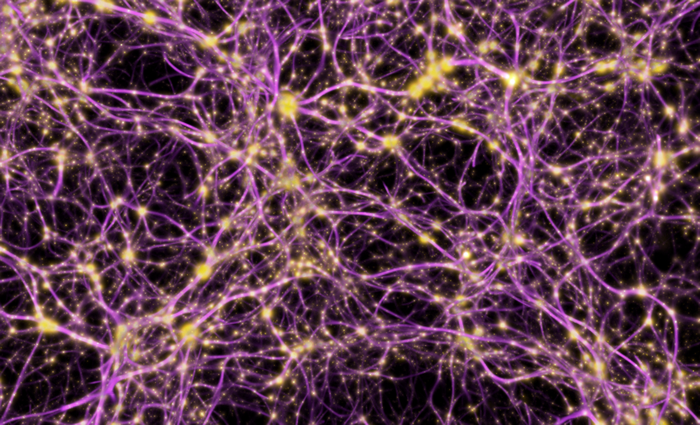

We present Monte Carlo Physarum Machine: a computational model suitable for reconstructing continuous transport networks from sparse 2D and 3D data. MCPM is a probabilistic generalization of Jones’s 2010 agent-based model for simulating the growth of Physarum polycephalum slime mold. We compare MCPM to Jones’s work on theoretical grounds, and describe a task-specific variant designed for reconstructing the large-scale distribution of gas and dark matter in the Universe known as the Cosmic Web. To analyze the new model, we first explore MCPM’s self-patterning behavior, showing a wide range of continuous network-like morphologies -- called “polyphorms” -- that the model produces from geometrically intuitive parameters. Applying MCPM to both simulated and observational cosmological datasets, we then evaluate its ability to produce consistent 3D density maps of the Cosmic Web. Finally, we examine other possible tasks where MCPM could be useful, along with several examples of fitting to domain-specific data as proofs of concept.

This article is a culmination of two years of work on the Monte Carlo Physarum Machine (MCPM) model. Our team has previously applied MCPM for reconstructing and visualizing large cosmological structures known as the Cosmic Web. We have also demonstrated that similar network-like structures can be found in language data when processed by neural networks into the so-called word embeddings.

The purpose of this article is to give a full account of the MCPM model and its components. We also provide a detailed comparison to its predecessor, the 2010 model by Jeff Jones. Much like Jones's model, we believe MCPM can be used for a variety of data-scientific tasks; the article discusses several examples.
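To make the agent-based idea concrete, here is a heavily simplified 2D sketch in the spirit of Jones's model: each agent senses a shared trail field ahead of it at three angles, turns, moves, and deposits trail, while the field is blurred and decayed between steps. In Jones's original model the agent turns deterministically toward the strongest sensor reading; MCPM replaces such rules with probabilistic sampling. Here we mimic that only loosely by choosing among the three directions with probability proportional to the sensed value raised to a power. This weighting rule, the parameter values, and all names are assumptions for illustration, not MCPM's actual sampling scheme (which operates in 3D).

```python
import math
import random

def sense(trail, x, y, heading, distance):
    """Read the trail grid at a point `distance` ahead along `heading` (toroidal wrap)."""
    h, w = len(trail), len(trail[0])
    sx = int(round(x + distance * math.cos(heading))) % w
    sy = int(round(y + distance * math.sin(heading))) % h
    return trail[sy][sx]

def agent_step(agent, trail, sense_angle=0.4, sense_dist=3.0, step=1.0,
               exponent=4.0, deposit=1.0, rng=random):
    """One stochastic steering step for an agent dict with keys x, y, heading."""
    heading = agent["heading"]
    candidates = [heading - sense_angle, heading, heading + sense_angle]
    readings = [sense(trail, agent["x"], agent["y"], c, sense_dist) for c in candidates]
    # Small floor keeps every direction possible even where no trail exists yet.
    weights = [r ** exponent + 1e-9 for r in readings]
    agent["heading"] = rng.choices(candidates, weights=weights)[0]
    h, w = len(trail), len(trail[0])
    agent["x"] = (agent["x"] + step * math.cos(agent["heading"])) % w
    agent["y"] = (agent["y"] + step * math.sin(agent["heading"])) % h
    trail[int(agent["y"]) % h][int(agent["x"]) % w] += deposit

def decay_and_diffuse(trail, decay=0.9):
    """Return a new trail grid: 3x3 box blur (toroidal wrap) followed by decay."""
    h, w = len(trail), len(trail[0])
    return [[decay * sum(trail[(y + dy) % h][(x + dx) % w]
                         for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9.0
             for x in range(w)]
            for y in range(h)]

# Usage: a strong trail straight ahead makes the agent keep its heading.
trail = [[0.0] * 20 for _ in range(20)]
trail[10][13] = 100.0
agent = {"x": 10.0, "y": 10.0, "heading": 0.0}
agent_step(agent, trail, rng=random.Random(0))
```

In a full simulation, many agents (and, in the cosmological use case, the data points acting as trail sources) alternate `agent_step` and `decay_and_diffuse` until the trail field settles into a network.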

Beyond scientific applications, the forms generated by MCPM are visually quite evocative. Besides Physarum itself, the patterns it self-synthesizes resemble mycelial and rhizomatic networks, organic scaffoldings such as bone marrow, and even networks of neurons. We envision modeling the appearance of such structures for educational and other creative uses, including physical ones realized with the help of 3D printing.

Imagine a thing in a complete unity with itself. What would that be like? A harmonious object, without contradictions. Smooth and soft, no ridges or grooves. No structure. Purity with no character.

Imagine a thing with infinite variability. Unlimited shapes and colors, unlimited individuality. Categories within categories, special islands of all different sizes. A disconnected chaos.

Now imagine a thing that's both of the above at the same time: possessing structure, yet internally fully interconnected.

→ Robust and Practical Measurement of Volume Transport Parameters in Solid Photo-polymer Materials for 3D Printing

@ OSA Optics Express 2021

Oskar Elek, Ran Zhang, Denis Sumin, Karol Myszkowski, Bernd Bickel, Alexander Wilkie, Jaroslav Krivanek, and Tim Weyrich

![[htm]](./meta/htm.png) Project Page

![[htm]](./meta/htm.png) Paper (OpEx)

![[bib]](./meta/bib.png) BibTeX entry

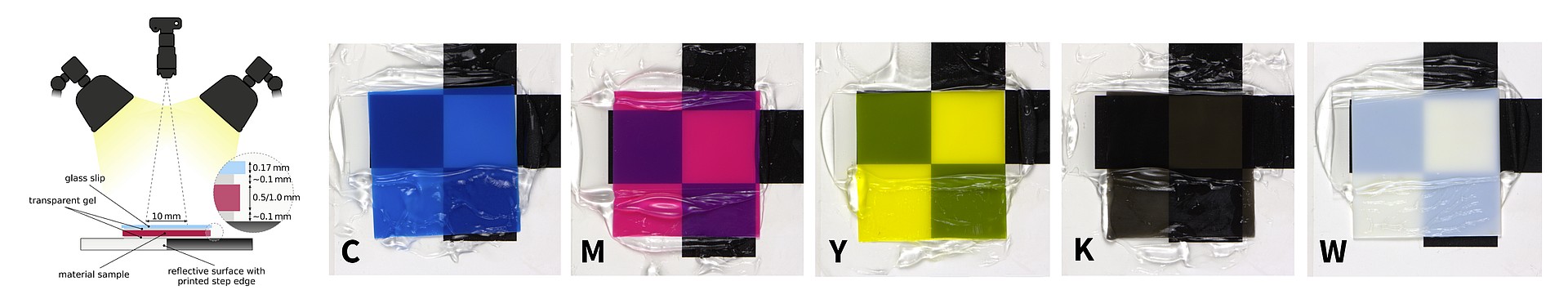

Volumetric light transport is a pervasive physical phenomenon, and therefore its accurate simulation is important for a broad array of disciplines. While suitable mathematical models for computing the transport are now available, obtaining the necessary material parameters needed to drive such simulations is a challenging task: direct measurements of these parameters from material samples are seldom possible. Building on the inverse scattering paradigm, we present a novel measurement approach which indirectly infers the transport parameters from extrinsic observations of multiple-scattered radiance. The novelty of the proposed approach lies in replacing structured illumination with a structured reflector bonded to the sample, and a robust fitting procedure that largely compensates for potential systematic errors in the calibration of the setup. We show the feasibility of our approach by validating simulations of complex 3D compositions of the measured materials against physical prints, using photo-polymer resins. As presented in this paper, our technique yields colorspace data suitable for accurate appearance reproduction in the area of 3D printing. Beyond that, and without fundamental changes to the basic measurement methodology, it could equally well be used to obtain spectral measurements that are useful for other application areas.
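The inverse scattering paradigm boils down to a loop: simulate the observations a candidate set of transport parameters would produce, compare them with the measured ones, and keep the parameters that match best. The sketch below shows that loop as a brute-force grid search with an L1 (absolute-error) loss, which is less sensitive to outliers than squared error. Everything here is a hypothetical stand-in: `toy_model` is a made-up analytic function in place of the Monte Carlo light transport simulation of the structured-reflector setup, and neither the grid search nor the L1 loss is claimed to be the paper's actual fitting procedure.

```python
import itertools
import math

def fit_parameters(forward_model, observed, param_grid):
    """Grid-search inverse fit: return (params, loss) for the parameter tuple whose
    simulated observations best match `observed` under an L1 loss."""
    best_params, best_loss = None, float("inf")
    for params in itertools.product(*param_grid):
        simulated = forward_model(*params)
        loss = sum(abs(s - o) for s, o in zip(simulated, observed))
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss

def toy_model(albedo, density):
    """Hypothetical forward model: signal observed at three sample thicknesses."""
    return [albedo * math.exp(-density * d) for d in (0.5, 1.0, 2.0)]

# Usage: recover the parameters that generated a synthetic "measurement".
observed = toy_model(0.8, 2.0)
best, loss = fit_parameters(toy_model, observed, [[0.6, 0.7, 0.8, 0.9], [1.0, 2.0, 3.0]])
```

Real setups replace the exhaustive grid with a smarter optimizer, since each forward evaluation is an expensive simulation.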

This project was born out of the need to optically characterize the polymer materials used in state-of-the-art color 3D printing.

Standard color calibration methods usually involve printing a calibration chart with different combinations of the printing materials or pigments. Though conceptually simple, this approach suffers from a combinatorial explosion: if 10 concentrations of each material are necessary for the characterization, then 1000 calibration patches need to be printed for 3 materials, and 100000 for 5 materials (the number of materials our Stratasys J750 printer operates with). Moreover, this method cannot accurately predict colors in translucent materials, since it doesn't capture the cross-talk between patches of different colors.
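The patch counts in the previous paragraph follow from printing every combination of c concentration levels for each of m materials:

```latex
N_{\text{patches}} = c^{m}, \qquad c = 10:\quad m = 3 \;\Rightarrow\; 10^{3} = 1\,000, \qquad m = 5 \;\Rightarrow\; 10^{5} = 100\,000
```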

It therefore became clear that we need to obtain the actual optical parameters of the print materials. Characterizing the materials this way allows us to actually simulate the volumetric light transport in the prints, even before they are physically manufactured. What's more, having the ability to virtually predict the print's appearance enables additional optimization of the material distribution, which significantly increases the resulting color and texture fidelity.

Our aim was to create a calibration methodology which is accurate, which robustly scales to new yet-unseen materials, and doesn't require expensive optical equipment. The last point is important, as we wanted to create a setup that can be implemented in a small design or art studio, without requiring extensive knowledge of optics. The resulting design, detailed in the above paper, fulfils all these requirements:

- it is accurate enough to yield simulated predictions that are visually indistinguishable from real prints,

- it scales to any new materials that can be printed or cast as a thin slice (i.e., virtually all materials used in 3D printing), and

- it consists of simple off-the-shelf components that together cost under $1000.

Beyond our initial work in 2017, the measured optical parameters have been used in subsequent works by Denis Sumin and Tobias Rittig to improve upon the original optimization methodology with faster prediction (using deep learning instead of Monte Carlo simulation) and application to arbitrary 3D geometries. These works represent the state of the art in color 3D printing as of 2021, and constitute important steps towards a full 3D object reproduction pipeline.

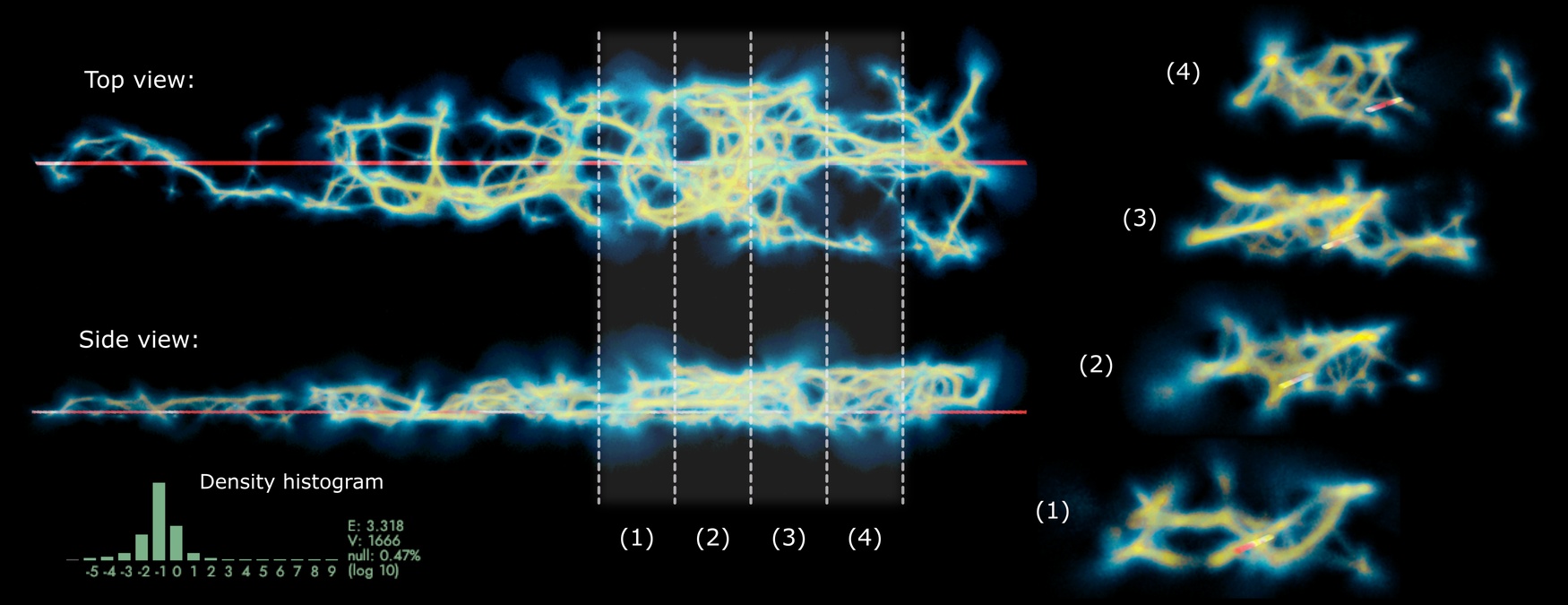

→ Volumetric Reconstruction for Interactive Analysis of the Cosmic Web

Winner of the Data Challenge @ IEEE Vis Astro 2020

Joseph N. Burchett, David Abramov, Oskar Elek, Angus G. Forbes

![[pdf]](./meta/pdf.png) Paper

![[avi]](./meta/avi.png) Video (CosmoVis)

![[avi]](./meta/avi.png) Video with CC (Polyphorm)

![[htm]](./meta/htm.png) MCPM Code

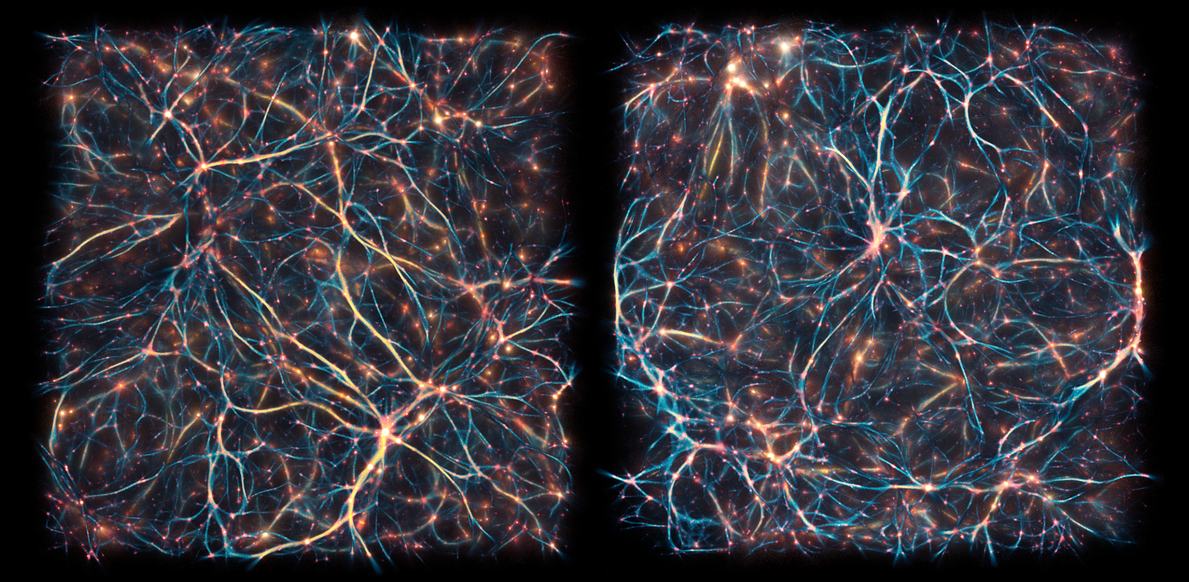

We present a multi-faceted study of the Illustris TNG-100 dataset at redshift z = 0, utilizing two interactive visual analysis tools: Polyphorm, which utilizes our Monte Carlo Physarum Machine (MCPM) algorithm to identify large scale structures of the Cosmic Web such as filaments and knots, and CosmoVis, which provides an interactive 3D visualization of gas, dark matter and stars in the intergalactic and circumgalactic medium (IGM/CGM). We provide a complex view of salient cosmological structures within TNG-100 and their relationships to the galaxies within them. From our MCPM Cosmic Web reconstruction using the positions and masses of TNG-100 halos, we quantify the environmental density of each galaxy in a manner sensitive to the filamentary structure. We demonstrate that at fixed galaxy mass, star formation is increasingly quenched in progressively denser environments. With CosmoVis, we reveal the temperature and density structure of the Cosmic Web, clearly demonstrating that more densely populated filaments and sheets are permeated with hotter IGM material, having T > 10^5.5 K, than more putative filaments (T ~ 10^4 K), bearing the imprint of feedback processes from the galaxies within. Additionally, we highlight a number of gas structures with interesting morphological or temperature/density characteristics for analysis with synthetic spectroscopy.
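The statement "at fixed galaxy mass, star formation is increasingly quenched in progressively denser environments" corresponds to a simple measurement: restrict galaxies to a narrow stellar-mass bin, then compute the fraction of quenched galaxies in each environmental-density bin. The sketch below does this, assuming each galaxy is a `(log_mstar, density, ssfr)` tuple and using the commonly adopted quenching threshold sSFR < 10^-11 yr^-1; both the threshold and the data layout are our assumptions, not necessarily the choices made in the paper.

```python
def quenched_fraction_by_density(galaxies, mass_range, density_edges, ssfr_cut=1e-11):
    """Quenched fraction per density bin for galaxies with lo <= log M* < hi.

    galaxies: iterable of (log_mstar, density, ssfr) tuples, ssfr in yr^-1.
    Returns one fraction per [edges[k], edges[k+1]) bin, or None for empty bins.
    """
    lo_m, hi_m = mass_range
    sample = [(d, s) for m, d, s in galaxies if lo_m <= m < hi_m]
    fractions = []
    for lo, hi in zip(density_edges[:-1], density_edges[1:]):
        in_bin = [s for d, s in sample if lo <= d < hi]
        fractions.append(sum(s < ssfr_cut for s in in_bin) / len(in_bin) if in_bin else None)
    return fractions

# Usage with made-up galaxies; the last one falls outside the mass bin.
galaxies = [(10.0, 0.5, 1e-10), (10.1, 0.8, 1e-12), (10.2, 5.0, 1e-12),
            (10.3, 6.0, 1e-13), (11.5, 0.5, 1e-13)]
fractions = quenched_fraction_by_density(galaxies, (10.0, 11.0), [0.0, 1.0, 10.0])
```

Holding mass fixed matters because more massive galaxies are both more often quenched and more often found in dense regions; without the mass cut, the density trend would partly reflect mass.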

This work combines two different software tools, Polyphorm and the then-unreleased CosmoVis, to provide a deep probe into the state-of-the-art simulated cosmological dataset Illustris TNG-100. We explore several modalities of the dataset using these two interactive 3D visualization tools, and present novel results illuminating the relationship between the galaxies' positions in the Cosmic Web and their local properties (such as star formation rates and temperatures of their respective gas halos).

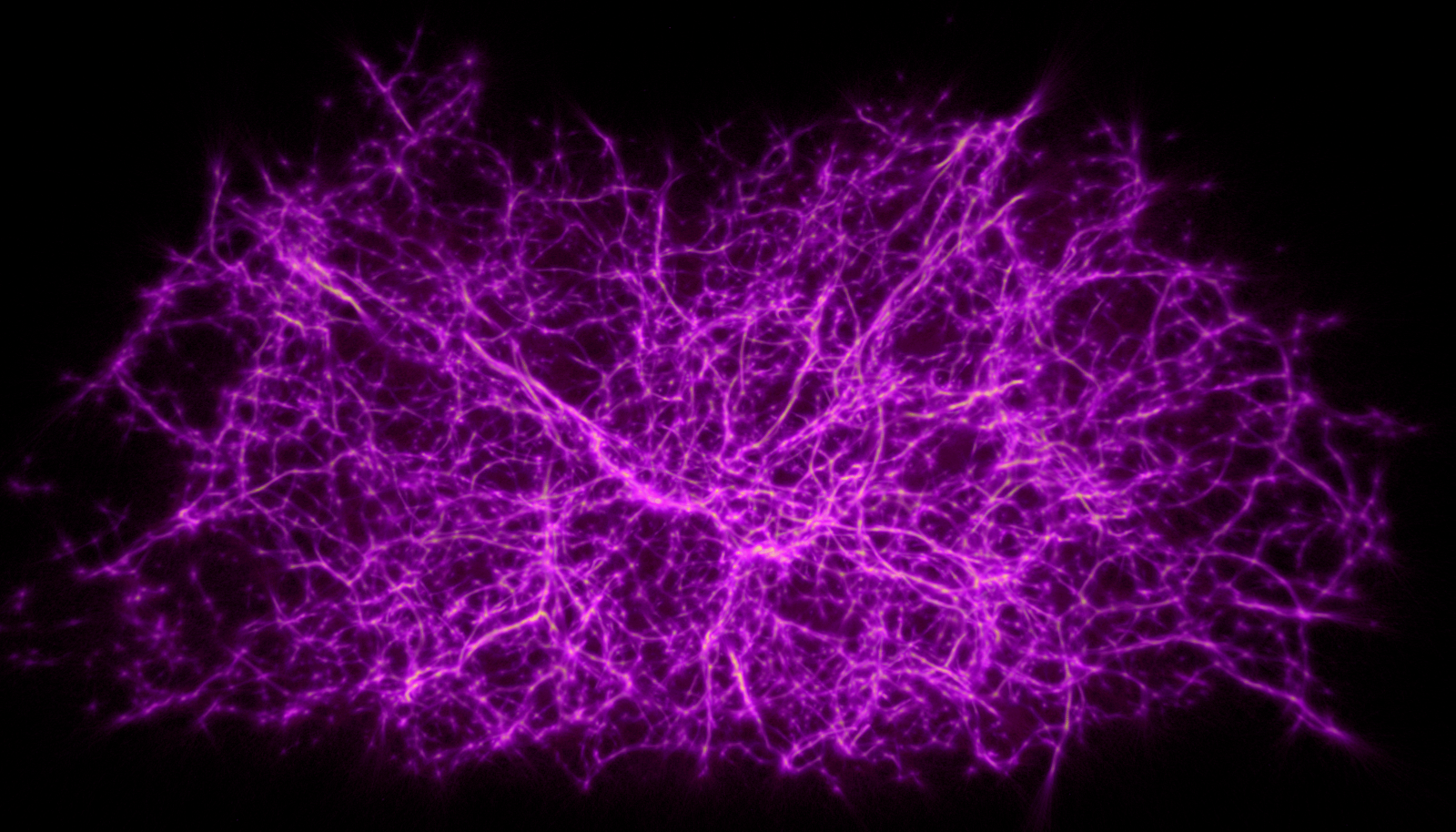

To provide an example, the image above shows Monte Carlo path tracing of the Cosmic Web structures reconstructed by Polyphorm, depicting the full TNG-100 dataset (left) and a slice about 20% of the full volume thickness (right). Here we interpret the input halos (red) as sources of illumination, and the reconstructed large-scale structures (blue-yellow) as a volumetric medium which not only emits, but also absorbs and scatters light. The combined effect of these interactions gives the dataset a better sense of depth and provides cues about the scale of the Cosmic Web features. For more quantitative results, be sure to check out our paper.
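The rendering described in the previous paragraph treats the reconstructed structures as a medium that emits, absorbs, and scatters light. The emission and absorption part can be sketched as front-to-back integration along a single camera ray, as below. This is a simplified illustration of our own: it omits scattering entirely (which the Monte Carlo path tracer in the image does handle), assumes piecewise-constant coefficients per ray segment, and uses hypothetical names.

```python
import math

def march_ray(emission, absorption, step):
    """Front-to-back emission-absorption integration along one ray.

    emission[i], absorption[i]: per-unit-length coefficients of the i-th ray
    segment, ordered from the camera outward; every segment has length `step`.
    Returns the radiance reaching the camera (scattering is ignored).
    """
    radiance, transmittance = 0.0, 1.0
    for e, a in zip(emission, absorption):
        seg_t = math.exp(-a * step)  # transmittance through this segment
        # Exact integral over a segment with constant e and a; limit e*step when a = 0.
        contrib = e * step if a == 0 else e * (1.0 - seg_t) / a
        radiance += transmittance * contrib
        transmittance *= seg_t
    return radiance

# Usage: a purely emissive medium just accumulates emission along the ray.
glow = march_ray([1.0, 1.0, 1.0], [0.0, 0.0, 0.0], 0.5)
```

Absorption is what produces the depth cues mentioned above: emission from structures behind dense regions is attenuated by the accumulated transmittance.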

→ Polyphorm: Structural Analysis of Cosmological Datasets via Interactive Physarum Polycephalum Visualization

@ IEEE Transactions on Visualization and Computer Graphics (presented at VIS 2020)

Oskar Elek, Joseph N. Burchett, J. Xavier Prochaska, Angus G. Forbes

![[htm]](./meta/htm.png) Paper (Arxiv)

![[htm]](./meta/htm.png) Paper (TVCG)

![[htm]](./meta/htm.png) Video Summary

![[htm]](./meta/htm.png) Talk @ VIS

![[htm]](./meta/htm.png) MCPM Code

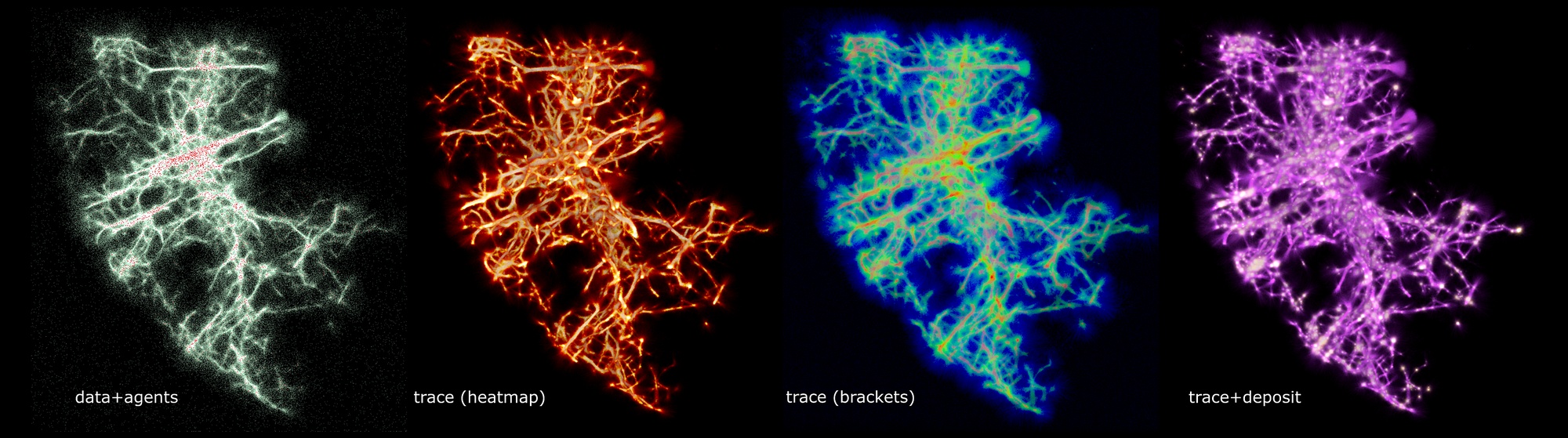

This paper introduces Polyphorm, an interactive visualization and model fitting tool that provides a novel approach for investigating cosmological datasets. Through a fast computational simulation method inspired by the behavior of Physarum polycephalum, a unicellular slime mold organism that efficiently forages for nutrients, astrophysicists are able to extrapolate from sparse datasets, such as galaxy maps archived in the Sloan Digital Sky Survey, and then use these extrapolations to inform analyses of a wide range of other data, such as spectroscopic observations captured by the Hubble Space Telescope. Researchers can interactively update the simulation by adjusting model parameters, and then investigate the resulting visual output to form hypotheses about the data. We describe details of Polyphorm’s simulation model and its interaction and visualization modalities, and we evaluate Polyphorm through three scientific use cases that demonstrate the effectiveness of our approach.

This paper summarizes over a year of work on our Polyphorm software: a detailed account of the tasks driving our design decisions, a full exposition of the MCPM model, and a concise explanation of the astronomical use cases (more details on these can be found in the related cosmology works on this page).

This software has been born out of several lucky accidents and seemingly unlikely connections between several disciplines. We are very excited about the result. As we continue developing this tool, along with the underlying MCPM methodology, we would appreciate your feedback on its current state as well as suggestions for future improvements.

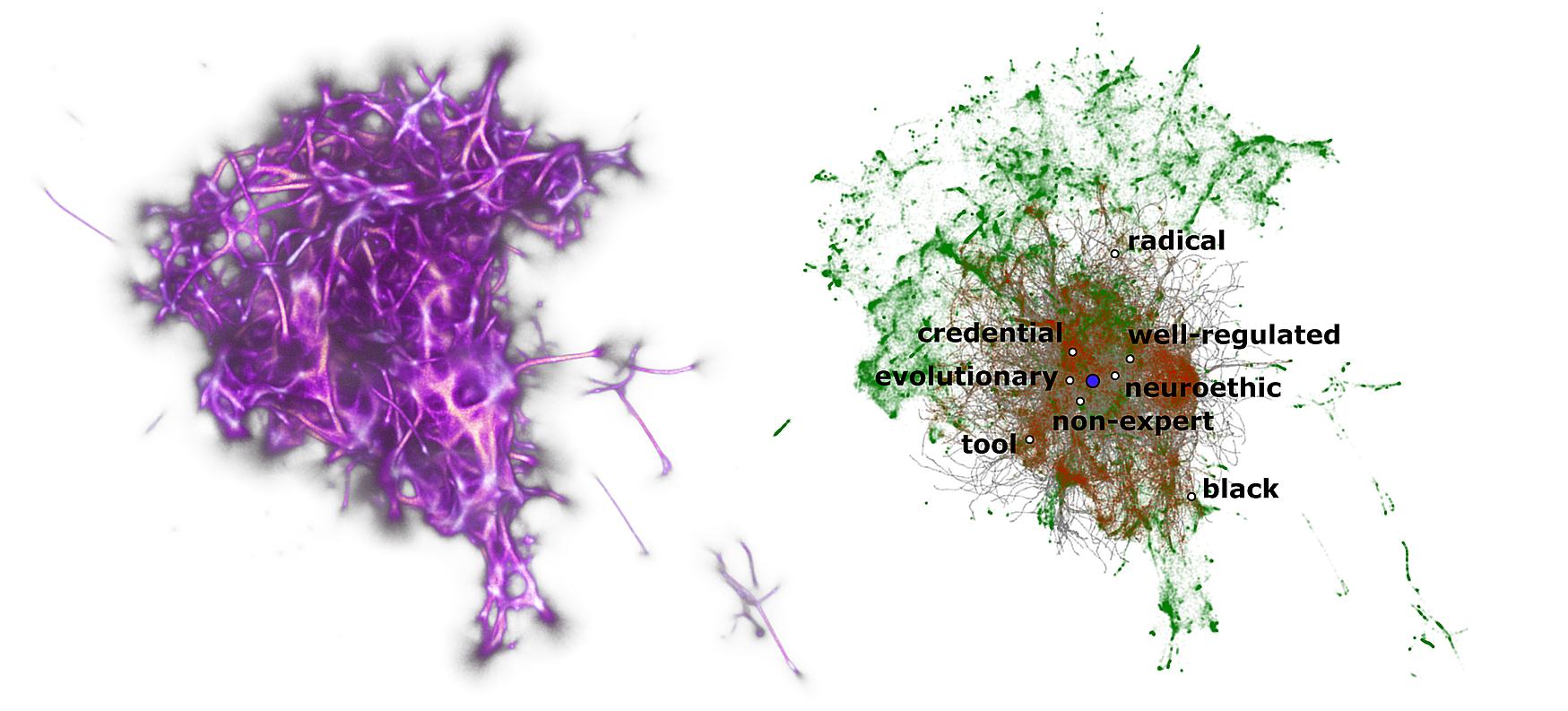

→ Bio-inspired Structure Identification in Language Embeddings

@ Visualization for Digital Humanities (2020)

Hongwei (Henry) Zhou, Oskar Elek, Pranav Anand, Angus G. Forbes

Media report: Article by Melissa Weckerle

![[pdf]](./meta/pdf.png) Paper (Hi-Res)

![[htm]](./meta/htm.png) Paper (Arxiv)

![[htm]](./meta/htm.png) Talk @ Vis4DH

![[htm]](./meta/htm.png) MCPM Code

Word embeddings are a popular way to improve downstream performance in contemporary language modeling. However, the underlying geometric structure of the embedding space is not well understood. We present a series of explorations using bio-inspired methodology to traverse and visualize word embeddings, demonstrating evidence of discernible structure. Moreover, our model also produces word similarity rankings that are plausible yet very different from those of common similarity metrics, namely cosine similarity and Euclidean distance. We show that our bio-inspired model can be used to investigate how different word embedding techniques result in different semantic outputs, which can emphasize or obscure particular interpretations in textual data.
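For reference, the two baseline metrics named in this abstract can already disagree with each other: cosine similarity only compares directions, while Euclidean distance also depends on vector length. The sketch below ranks a tiny vocabulary of made-up 2D "embeddings" both ways; the vectors and function names are hypothetical, and the MCPM-based ranking from the paper is not reproduced here.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v (assumed non-zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def euclidean_distance(u, v):
    """Straight-line distance between vectors u and v."""
    return math.dist(u, v)

def rank_neighbours(query, vocab, metric="cosine"):
    """Words of `vocab` (dict word -> vector), most similar to `query` first."""
    if metric == "cosine":
        return sorted(vocab, key=lambda w: -cosine_similarity(query, vocab[w]))
    return sorted(vocab, key=lambda w: euclidean_distance(query, vocab[w]))

# Usage: "a" points the same way as the query but is far away; "b" is close but tilted.
vocab = {"a": (10.0, 0.0), "b": (0.9, 0.3)}
by_cosine = rank_neighbours((1.0, 0.0), vocab, "cosine")
by_euclid = rank_neighbours((1.0, 0.0), vocab, "euclidean")
```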

We advocate viewing language as an organic entity: one born out of necessity, that undergoes evolution and adapts in response to selective pressures, that mutates and crosses over with others, and that eventually dies, dissolving into those springing off it.

Our main inspiration is the work of the post-structuralist philosopher Gilles Deleuze, who adopted the botanical term rhizome to define a universal conceptual image of society and the abstract artifacts it produces. As opposed to traditional 'tree-like' hierarchical views of human knowledge and organization, rhizomatic structures are defined by "ceaselessly established connections between semiotic chains, organizations of power, and circumstances relative to the arts, sciences, and social struggles". In other words, rhizomatic structures allow for complex and distributed, yet fundamentally interconnected, views of human activity and its products.

This paper reports on the first experimental results of our project. To make the highly abstract task of elucidating a rhizomatic structure within (the English) language practical, we rely on a chain of several computational models.

The first model is a language embedding, which uses transformer neural networks to process large quantities of written text (such as the English Wikipedia corpus). The result of this processing is a set of points defined in a high-dimensional vector space (typically with hundreds of dimensions). The points in this abstract space are called 'tokens': they represent individual words in the processed text, often associated with their specific semantics and usage patterns. This is the space in which we search for rhizomatic structures, relying on findings that embeddings do encode semantic information about the processed text, such as the diverse contexts in which individual words occur, functional analogies, etc. However, this information is only present implicitly, as the embedding itself is devoid of any high-level structure.

The second model we employ is dimensionality reduction, specifically the UMAP method. This model performs a context-sensitive projection of the high-dimensional point cloud of tokens into a low-dimensional space, typically 2D or 3D. We opt for the latter, as 3D space allows for much more complex topologies as compared to 2D. This is important, as the purpose of this step is to express the embedding in a geometrically more manageable space, while preserving as much of the topological information of the original high-dimensional embedding space as possible.

The third and final model is MCPM, an agent-based method designed to reconstruct complex network structures out of discrete and potentially sparse sets of points. This bio-inspired method imitates the morphologies of Physarum polycephalum 'slime mold', one of nature's most prolific pattern finders, whose growth is underpinned by the principles of optimal transport, such as the principle of least effort. MCPM has been successfully used in astronomy (see the cosmology works on this page) to reconstruct the rhizome-like Cosmic Web networks; its application to language embedding data produces similarly intriguing results, including the one in the image above, with more presented in our paper.

→ Disentangling the Cosmic Web Towards FRB 190608

@ The Astrophysical Journal (July 2020)

Sunil Simha, Joseph N. Burchett, J. Xavier Prochaska, Jay S. Chittidi, Oskar Elek, Nicolas Tejos et al.

![[pdf]](./meta/htm.png) Paper (Arxiv)

![[pdf]](./meta/htm.png) Paper (ApJ)

![[htm]](./meta/htm.png) MCPM Code

Fast radio burst (FRB) 190608 was detected by the Australian Square Kilometre Array Pathfinder (ASKAP) and localized to a spiral galaxy at z_host = 0.11778 in the Sloan Digital Sky Survey (SDSS) footprint. The burst has a large dispersion measure (DM_FRB = 339.8 pc cm⁻³) compared to the expected cosmic average at its redshift. It also has a large rotation measure (RM_FRB = 353 rad m⁻²) and scattering timescale (τ = 3.3 ms at 1.28 GHz). Chittidi et al. perform a detailed analysis of the ultraviolet and optical emission of the host galaxy and estimate the host DM contribution to be 110 ± 37 pc cm⁻³. This work complements theirs and reports the analysis of the optical data of galaxies in the foreground of FRB 190608 in order to explore their contributions to the FRB signal. Together, the two studies delineate an observationally driven, end-to-end study of matter distribution along an FRB sightline, the first study of its kind. Combining our Keck Cosmic Web Imager (KCWI) observations and public SDSS data, we estimate the expected cosmic dispersion measure DM_cosmic along the sightline to FRB 190608. We first estimate the contribution of hot, ionized gas in intervening virialized halos (DM_halos ≈ 7–28 pc cm⁻³). Then, using the Monte Carlo Physarum Machine methodology, we produce a 3D map of ionized gas in cosmic web filaments and compute the DM contribution from matter outside halos (DM_IGM ≈ 91–126 pc cm⁻³). This implies that a greater fraction of ionized gas along this sightline is extant outside virialized halos. We also investigate whether the intervening halos can account for the large FRB rotation measure and pulse width and conclude that it is implausible. Both the pulse broadening and the large Faraday rotation likely arise from the progenitor environment or the host galaxy.

We present the first complete study of cosmic matter distribution towards an extragalactic Fast Radio Burst, namely FRB 190608. We analyze the observed dispersion measure (DM) of the signal emitted by FRB 190608. The dispersion is caused by interactions of the FRB radiation with matter in the intervening entities: the source galaxy and its halo, our own galaxy and its halo, and finally the intergalactic medium contained in the Cosmic Web.

To estimate the density distribution of the intergalactic medium, we rely on the Monte Carlo Physarum Machine (MCPM) model, fitted to a narrow wedge of roughly 1000 galaxies captured by the Sloan Digital Sky Survey. With the help of MCPM, we demonstrate that the unusually high observed dispersion measure of FRB 190608 is explained by the presence of dense filamentary structures in the foreground of the FRB, at redshift z ≈ 0.08 (see diagram above).
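
The DM budget above depends on integrating the electron density of the reconstructed Cosmic Web along the sightline. The sketch below illustrates that last step and is not the paper's pipeline. It makes two assumptions:

- a flat ΛCDM cosmology with illustrative parameters (H0 = 67.7 km/s/Mpc, Ωm = 0.31), which are not necessarily the values used in the paper;
- a proper electron density n_e(z) that has already been sampled along the sightline. Deriving n_e from the MCPM density map is not shown.

It applies the standard relation DM = ∫ c n_e(z) / [(1 + z)² H(z)] dz with the trapezoid rule. The function names are hypothetical.

```python
import math

C_KM_S = 299792.458  # speed of light [km/s]
PC_PER_MPC = 1.0e6   # parsecs per megaparsec


def hubble(z, h0=67.7, omega_m=0.31):
    """H(z) in km/s/Mpc for a flat Lambda-CDM cosmology (illustrative parameters)."""
    return h0 * math.sqrt(omega_m * (1.0 + z) ** 3 + (1.0 - omega_m))


def dispersion_measure(z_samples, ne_proper, h0=67.7, omega_m=0.31):
    """Trapezoid-rule estimate of DM = integral of c * n_e(z) / ((1 + z)^2 * H(z)) dz.

    z_samples : increasing redshifts along the sightline
    ne_proper : proper free-electron density [cm^-3] at each redshift sample
    Returns DM in pc cm^-3.
    """
    if len(z_samples) != len(ne_proper) or len(z_samples) < 2:
        raise ValueError("need matching z and n_e sequences with at least 2 samples")
    integrand = [
        # c / H(z) is a length in Mpc; convert it to pc so DM ends up in pc cm^-3
        (C_KM_S / hubble(z, h0, omega_m)) * PC_PER_MPC * ne / (1.0 + z) ** 2
        for z, ne in zip(z_samples, ne_proper)
    ]
    dm = 0.0
    for i in range(len(z_samples) - 1):
        dz = z_samples[i + 1] - z_samples[i]
        dm += 0.5 * (integrand[i] + integrand[i + 1]) * dz
    return dm
```

Call `dispersion_measure(z, ne)` with `z` running from 0 to z_host = 0.11778. It returns the contribution of matter along the sightline for that density profile. A host-galaxy term measured in the host's rest frame enters the observed total scaled by 1/(1 + z_host).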

→ Monte Carlo Physarum Machine: An Agent-based Model for Reconstructing Complex 3D Transport Networks

@ Artificial Life conference (July 2020)

Oskar Elek, Joseph N. Burchett, J. Xavier Prochaska, Angus G. Forbes

![[pdf]](./meta/htm.png) Paper (ALife)

![[avi]](./meta/avi.png) Presentation Video

![[htm]](./meta/htm.png) Code

We introduce Monte Carlo Physarum Machine: a dynamic computational model designed for reconstructing complex transport networks. MCPM extends existing work on agent-based modeling of Physarum polycephalum with a probabilistic formulation, making it suitable for 3D reconstruction and visualization problems. Our motivation is estimating the distribution of the intergalactic medium -- the Cosmic web, which has so far eluded full spatial mapping. MCPM proves capable of this task, opening up a way towards answering a number of open astrophysical and cosmological questions.
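
The heavily simplified 2D sketch below shows agent-based Physarum modeling with probabilistic steering. It is not MCPM itself. MCPM works in 3D, uses its own stochastic direction sampling and has further parameters.

In this toy version:

- agents sense a deposit field at three probes ahead of them;
- each agent picks one of the three directions at random, with probability proportional to the sensed deposit raised to an exponent;
- the agent then moves and leaves a deposit;
- data points act as 'food' that keeps emitting deposit;
- the field diffuses and decays every step.

All names and parameter values are hypothetical.

```python
import math
import random


def sample_field(field, x, y):
    """Nearest-cell lookup with toroidal (wrap-around) boundaries."""
    h, w = len(field), len(field[0])
    return field[math.floor(y) % h][math.floor(x) % w]


def diffuse_and_decay(field, decay):
    """3x3 box blur (wrapping at the borders) followed by multiplicative decay."""
    h, w = len(field), len(field[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    s += field[(y + dy) % h][(x + dx) % w]
            out[y][x] = decay * s / 9.0
    return out


def simulate(points, size=64, n_agents=500, steps=100, sense_dist=4.0,
             sense_angle=math.radians(22.5), step_size=1.0, exponent=4.0,
             agent_deposit=0.1, data_deposit=1.0, decay=0.9, seed=0):
    """Toy 2D Physarum-style agent model with probabilistic steering.

    points : list of (x, y) data points in [0, size) acting as 'food'.
    Returns the deposit ('trail') field as a size x size list of lists.
    """
    rng = random.Random(seed)
    trail = [[0.0] * size for _ in range(size)]
    agents = [[rng.uniform(0, size), rng.uniform(0, size), rng.uniform(0, 2 * math.pi)]
              for _ in range(n_agents)]
    for _ in range(steps):
        # Data points replenish the deposit every iteration.
        for px, py in points:
            trail[math.floor(py) % size][math.floor(px) % size] += data_deposit
        for a in agents:
            x, y, heading = a
            candidates = (heading - sense_angle, heading, heading + sense_angle)
            weights = []
            for ang in candidates:
                v = sample_field(trail, x + sense_dist * math.cos(ang),
                                 y + sense_dist * math.sin(ang))
                weights.append(v ** exponent + 1e-9)  # tiny floor keeps exploration alive
            # Probabilistic choice instead of always turning to the strongest probe.
            heading = rng.choices(candidates, weights=weights)[0]
            x = (x + step_size * math.cos(heading)) % size
            y = (y + step_size * math.sin(heading)) % size
            a[0], a[1], a[2] = x, y, heading
            trail[math.floor(y) % size][math.floor(x) % size] += agent_deposit
        trail = diffuse_and_decay(trail, decay)
    return trail
```

For example, `simulate([(16, 16), (48, 20), (30, 50)])` returns a 64×64 deposit field. With suitable parameters, the deposit tends to concentrate around the data points and along paths between them. This is a toy analogue of the filaments that MCPM reconstructs.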

This short paper is the first standalone exposition of the Monte Carlo Physarum Machine (MCPM) model, which we previously used in the context of astronomy for mapping the Cosmic web. As a quick introduction to the model, we sketch its usage and design rationale. For a full dive into the wonders of MCPM, stay tuned for the full paper in the upcoming months. Until then, how about checking out one of the videos showcasing our little virtual creature?

We designed a computer simulation inspired by how the Physarum polycephalum 'slime mold' grows and explores its environment in search of food. Physarum is known for its ability to create transport networks out of its body mass. These networks cover all the available food sources and yet spend the least possible amount of biological material. Our simulation inherits this property. We show that if we 'feed' data to the virtual Physarum and interpret them as food distributed in space, our simulation will grow efficient networks connecting all the data together! We use this behavior to reconstruct the Cosmic Web: the biggest network known to science, which connects galaxies together and transports gas between them.

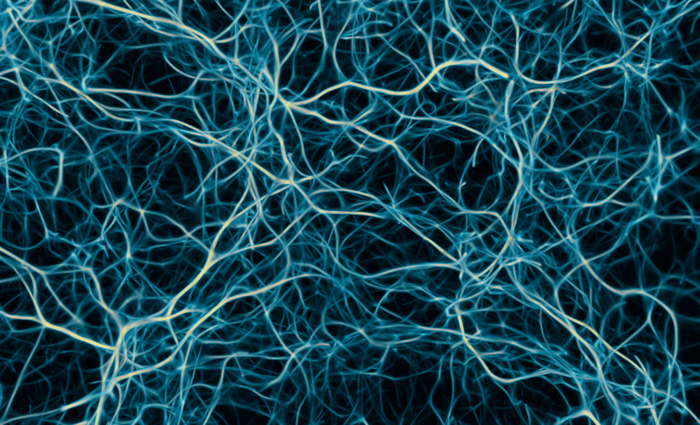

→ Revealing the Dark Threads of the Cosmic Web

@ The Astrophysical Journal Letters (March 2020)

Joseph N. Burchett, Oskar Elek, Nicolas Tejos, J. Xavier Prochaska, Todd M. Tripp, Rongmon Bordoloi, Angus G. Forbes

Media reports (primary): UC Santa Cruz · Hubble · NASA · ESA · HST · Planetary Society · Popular Science · Ars Technica

Media reports (secondary): Sci Show · Poetry of Science · Seeker · Reddit · Inverse · Anton Petrov · Universe Today · Astronomy · Discover · Medium · Phys.org · Science News · Science Daily · Space · SciNews · Enterpreneur Fund · Bioengineer · Paco Jariego · Science Codex · Daily Space · Cosmic Companion

Thanks to the journalists who have covered our work!

Project files:

![[htm]](./meta/htm.png) Article (ApJL)

![[htm]](./meta/htm.png) Article (Arxiv)

![[htm]](./meta/htm.png) Code

![[pdf]](./meta/avi.png) Video (SDSS)

![[pdf]](./meta/avi.png) Video (B-P)

![[pdf]](./meta/bib.png) Bibtex entry

Modern cosmology predicts that matter in our Universe has assembled today into a vast network of filamentary structures colloquially termed the Cosmic Web. Because this matter is either electromagnetically invisible (i.e., dark) or too diffuse to image in emission, tests of this cosmic web paradigm are limited. Widefield surveys do reveal web-like structures in the galaxy distribution, but these luminous galaxies represent less than 10% of baryonic matter. Statistics of absorption by the intergalactic medium (IGM) via spectroscopy of distant quasars support the model yet have not conclusively tied the diffuse IGM to the web. Here, we report on a new method inspired by the Physarum polycephalum slime mold that is able to infer the density field of the Cosmic Web from galaxy surveys. Applying our technique to galaxy and absorption-line surveys of the local Universe, we demonstrate that the bulk of the IGM indeed resides in the Cosmic Web. From the outskirts of Cosmic Web filaments, at approximately the cosmic mean matter density (ρm) and about 5 virial radii from nearby galaxies, we detect an increasing HI absorption signature towards higher densities and the circumgalactic medium, to about 200 ρm. However, the absorption is suppressed within the densest environments, suggesting shock-heating and ionization deep within filaments and/or feedback processes within galaxies.

Across dank, shaded

habitats of cosmic intent

specks of light flicker

into life.

Emerging from chaos,

filaments of matter

weave their nets;

unclassifiable structures

that stretch

across the emptiness

as ethereal webs that

glisten in the dewy dawn.

Barely trodden paths

glow faintly in the dark;

celestial causeways that

trace the congruence of

our galactic ancestry.

Their hidden alleyways

conspicuous by the

mass of what we

cannot see;

the weight of their

neglect

calling to us

from across the void.

Blindly, we turn our

eyes to the sky,

searching for breadcrumbs

between the pathways,

oblivious to knowledge

that has always been with us:

the map of our universe

embraced within a single cell.

-- Sam Illingworth, March 13, 2020

We found evidence of structural similarity between the shape of Physarum polycephalum (the 'slime mold') and the Cosmic Web, a network of intergalactic gas and dark matter that is arguably the largest distinct structure in the known Universe. Based on this similarity, we developed a specialized machine-learning algorithm (inspired by Physarum polycephalum) that can learn the shape of the Cosmic Web. We train this algorithm on data from cosmological simulations run on massive supercomputers. We then use it to reconstruct a portion of the Cosmic Web from 37,000 galaxies observed by the Sloan Digital Sky Survey, and compare the result against intergalactic gas absorption measured with the Hubble Space Telescope. The result is a first-of-its-kind map of the Cosmic Web, which allows us to ask new questions about the distribution of the intergalactic medium and dark matter throughout the universe.

→ Learning Patterns in Sample Distributions for Monte Carlo Variance Reduction

@ ArXiv 2019

Oskar Elek, Manu M. Thomas, Angus G. Forbes

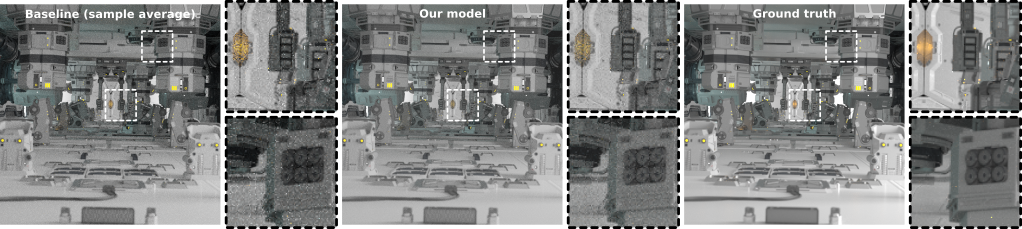

This paper investigates a novel a-posteriori variance reduction approach in Monte Carlo image synthesis. Unlike most established methods based on lateral filtering in the image space, our proposition is to produce the best possible estimate for each pixel separately, from all the samples drawn for it. To enable this, we systematically study the per-pixel sample distributions for diverse scene configurations. Noting that these are too complex to be characterized by standard statistical distributions (e.g., Gaussians), we identify patterns recurring in them and exploit those for training a variance-reduction model based on neural nets. As a result, we obtain numerically better estimates than simple averaging of samples. This method is compatible with existing image-space denoising methods, as the improved estimates of our model can be used for further processing. We conclude by discussing how the proposed model could in the future be extended for fully progressive rendering with constant memory footprint and scene-sensitive output.

Physically based rendering as of today is built, virtually without exceptions, on the stochastic Monte Carlo (MC) paradigm. This stands to reason: MC is a generally applicable framework capable of producing numerical solutions to complex rendering scenarios. On the flip side, the cost of this universal applicability is the ever-present noise in the solution -- or in other words, the variance of the employed estimator.

Most existing approaches to remove the noise, this undesired error in the signal, are based on the image-space paradigm adapted to MC rendering from signal processing. We advocate taking a step back from this approach, as valuable information from the costly MC samples is neglected in favor of using statistical aggregates of these samples instead.

This paper represents only an initial step in this effort. We present a detailed study of the per-pixel sample distributions for several rendering scenarios, as well as a tool that enables designers and developers to visualize these distributions produced by their own renderer. Based on the knowledge from this study, we present a prototype machine-learning approach to model the patterns implicitly contained in these noisy distributions. The outputs of our model are improved estimates for each pixel of the rendered image, surpassing the standard estimates (sample averages) in over 90% of cases.

Ultimately, we argue for the following. The variance of an MC-based rendering is a function of many parts: the physics of light transport, the design of the simulation algorithm, and the rendered scene. To reduce the amount of variance (that is, the error) in the resulting solution, we ideally need to use all the information known about these parts. This means both a theoretical analysis of the physics-based numerical solver and a data-driven model that incorporates scene-specific knowledge. This is the direction we plan to follow in this project.
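
The paper learns per-pixel estimators with neural networks. The sketch below uses a much simpler stand-in: a linear model that maps each pixel's sorted samples (order statistics) to its true value, fitted by least squares. The synthetic lognormal 'pixels' are an assumption, chosen only because they are heavy-tailed like many MC sample distributions. The function names are hypothetical.

The plain sample mean is itself one such linear model (all weights 1/n, zero intercept). The fitted model therefore cannot do worse than averaging on the data it was trained on.

```python
import numpy as np


def make_pixels(n_pixels, n_samples, rng):
    """Synthetic heavy-tailed 'pixels' (lognormal samples) with known true means."""
    sigma = rng.uniform(0.5, 1.5, size=n_pixels)
    mu = rng.uniform(-1.0, 1.0, size=n_pixels)
    samples = rng.lognormal(mean=mu[:, None], sigma=sigma[:, None],
                            size=(n_pixels, n_samples))
    true_means = np.exp(mu + 0.5 * sigma ** 2)  # analytic mean of a lognormal
    return samples, true_means


def order_stat_features(samples):
    """Per-pixel sorted samples plus a constant column (intercept)."""
    sorted_s = np.sort(samples, axis=1)
    return np.hstack([sorted_s, np.ones((samples.shape[0], 1))])


def fit_estimator(samples, true_means):
    """Least-squares weights mapping order statistics to the true pixel value."""
    weights, *_ = np.linalg.lstsq(order_stat_features(samples), true_means, rcond=None)
    return weights


def predict(weights, samples):
    """Learned per-pixel estimate from all samples of each pixel."""
    return order_stat_features(samples) @ weights
```

Unlike the sample mean, such a learned estimator is generally biased: it trades bias for lower variance. Whether the gain carries over to unseen pixels depends on how well the training distributions match them.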

We visualize -- and use artificial neural networks to learn -- the shapes of statistical sample distributions in Monte Carlo rendering, and apply that knowledge to produce improved (denoised) images from small sample sets.

→ Volume Path Guiding Based on Zero-Variance Random Walk Theory

@ ACM Transactions on Graphics (presented at SIGGRAPH 2019)

Sebastian Herholz, Yangyang Zhao, Oskar Elek, Derek Nowrouzezahrai, Hendrik Lensch, Jaroslav Krivanek

![[htm]](./meta/htm.png) Project page

![[htm]](./meta/htm.png) Paper and Talk

![[bib]](./meta/bib.png) Bibtex entry

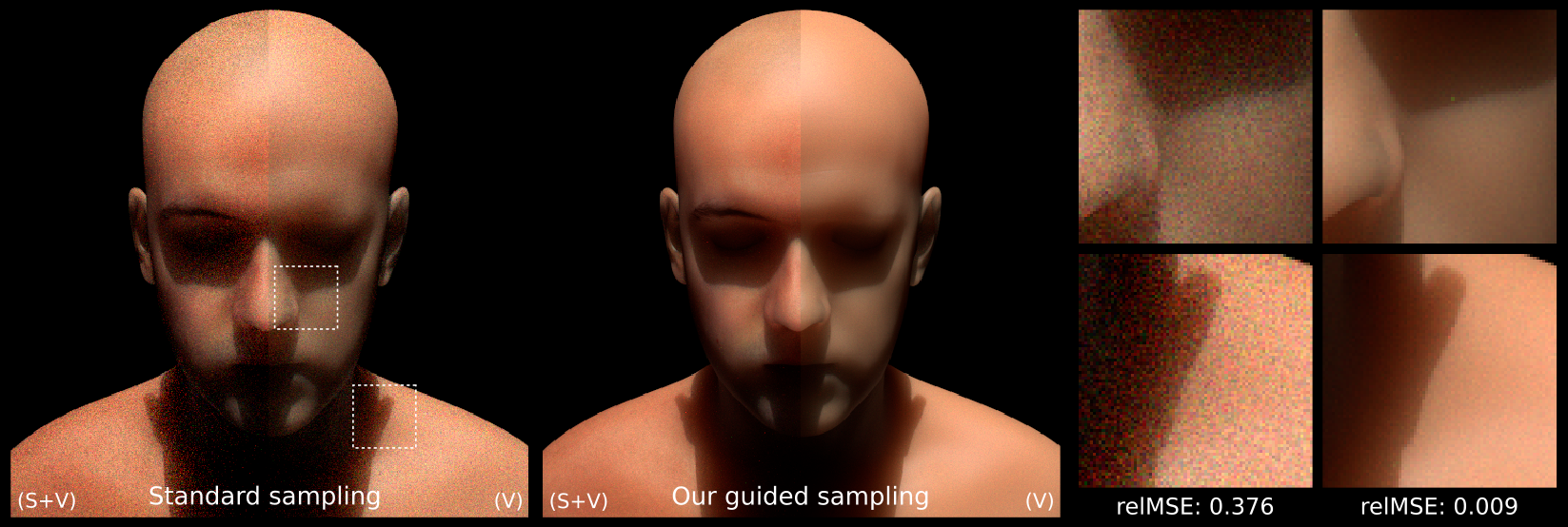

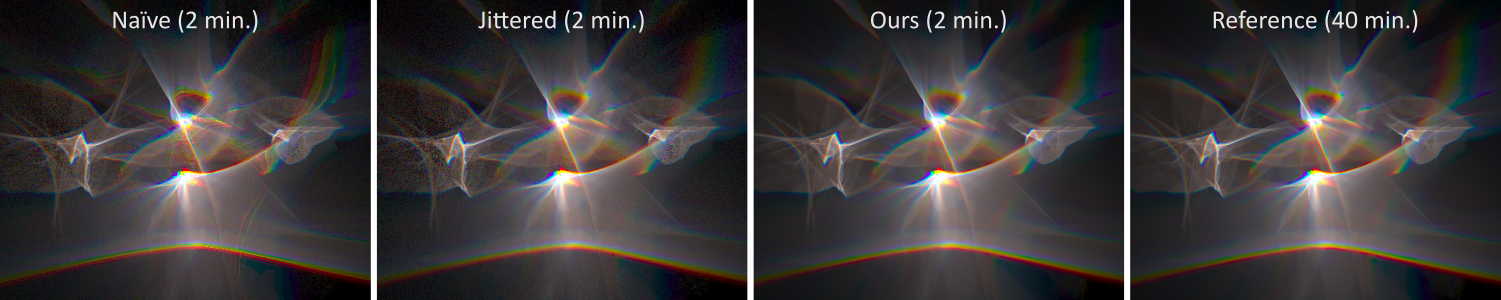

The efficiency of Monte Carlo methods, commonly used to render participating media, is directly linked to the manner in which random sampling decisions are made during path construction. Notably, path construction is influenced by scattering direction and distance sampling, Russian roulette, and splitting strategies. We present a consistent suite of volumetric path construction techniques where all these sampling decisions are guided by a cached estimate of the adjoint transport solution. The proposed strategy is based on the theory of zero-variance path sampling schemes, accounting for the spatial and directional variation in volumetric transport. Our key technical contribution, enabling the use of this approach in the context of volume light transport, is a novel guiding strategy for sampling the particle collision distance proportionally to the product of transmittance and the adjoint transport solution (in-scattered radiance). Furthermore, scattering directions are likewise sampled according to the product of the phase function and the incident radiance estimate. Combined with guided Russian roulette and splitting strategies tailored to volumes, we demonstrate about an order-of-magnitude error reduction compared to standard unidirectional methods. Consequently, our approach can render scenes otherwise intractable for such methods, while still retaining their simplicity (compared to bidirectional methods).

This project is a natural conclusion of the line of work that our group has been focusing on for several years now. Our mission here has been to:

1) unify multiple partial methods concerned with Bayesian path guiding under the framework of zero-variance sampling (known primarily from neutron transport), and
2) apply the resulting methodology to render volumetric participating media.

The result is an unbiased method that is robust across a number of different media configurations and optical properties. It surpasses standard (unguided) path tracing by an order of magnitude in terms of solution error (see the image above for an example).

The key advantage of this approach is that it encapsulates all of the stochastic decisions needed by a Monte Carlo solver in volumetric media. All of these are based on the same cached representation of the adjoint radiance solution, and formulated in the zero-variance framework (which can be seen as a generalization of importance sampling but with global decisions). This leads to guided volumetric path tracing -- an algorithm which is theoretically optimal and in practice limited only by the accuracy of the cached adjoint solution.
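
The key step named in the abstract is sampling the collision distance proportionally to transmittance times in-scattered radiance. It can be sketched by tabulating that product along a ray segment and inverting its CDF.

This sketch makes three simplifying assumptions:

- a homogeneous medium, so T(t) = exp(−σ_t t);
- a cached radiance estimate given as a plain Python callable;
- piecewise-constant bins along the segment.

It also leaves out two things a practical renderer needs: the probability of leaving the segment without a collision, and the combination of guided and unguided sampling. Names are hypothetical.

```python
import bisect
import math
import random


def build_distance_sampler(t_max, sigma_t, sigma_s, radiance_estimate, n_bins=64):
    """Tabulate a pdf over t in [0, t_max] proportional to sigma_s * T(t) * L_in(t)."""
    dt = t_max / n_bins
    weights = []
    for i in range(n_bins):
        t = (i + 0.5) * dt  # evaluate the product at the bin midpoint
        transmittance = math.exp(-sigma_t * t)
        weights.append(sigma_s * transmittance * max(radiance_estimate(t), 0.0))
    total = sum(weights) * dt
    if total <= 0.0:
        raise ValueError("guiding target is zero on the whole segment")
    pdf = [w / total for w in weights]  # piecewise-constant density over t
    cdf = [0.0]
    for p in pdf:
        cdf.append(cdf[-1] + p * dt)
    cdf[-1] = 1.0  # absorb rounding error
    return dt, pdf, cdf


def sample_distance(sampler, u):
    """Map a uniform number u in [0, 1) to (t, pdf(t)) by inverting the CDF."""
    dt, pdf, cdf = sampler
    i = bisect.bisect_right(cdf, u) - 1
    i = min(max(i, 0), len(pdf) - 1)
    frac = (u - cdf[i]) / (pdf[i] * dt) if pdf[i] > 0.0 else 0.5
    frac = min(max(frac, 0.0), 1.0)
    return (i + frac) * dt, pdf[i]
```

The Monte Carlo weight of a sampled collision is the integrand divided by pdf(t). In the paper, this guided decision is complemented by guided Russian roulette and splitting.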

Accurate visual simulation of volumetric materials (such as clouds, smoke or skin) is very time-consuming, taking hours or even days for a single realistic image. To speed up that process, we have developed an algorithm that 'guides' the simulation towards light sources, thus preventing it from having to search for them randomly (which is currently the standard way). Incorporated into a 3D rendering software, our method would save the time, computational resources, and energy of its user, for instance a visual artist or an architect.

→ Towards a Principled Kernel Prediction for Spatially Varying BSSRDFs

@ EG Workshop on Material Appearance 2018

Oskar Elek and Jaroslav Krivanek

![[html]](./meta/htm.png) Project page

![[pdf]](./meta/pdf.png) Paper

![[pptx]](./meta/ppt.png) Conference slides

![[bib]](./meta/bib.png) BibTeX entry

While the modeling of sub-surface translucency using homogeneous BSSRDFs is an established industry standard, applying the same approach to heterogeneous materials is predominantly heuristic. We propose a more principled methodology for obtaining and evaluating a spatially varying BSSRDF, on the basis of the volumetric sub-surface structure of the simulated material. The key ideas enabling this are a simulation-data driven kernel for aggregating the spatially varying material parameters, and a structure-preserving decomposition of the subsurface transport into a local and a global component. Our current results show significantly improved accuracy for planar materials with spatially varying scattering albedo. We also discuss extending the approach to general geometries and full heterogeneity of the material parameters.

One of the key challenges we faced in the texture fabrication project was efficiently predicting the appearance of the optimized object. The prediction algorithm we used, path tracing, is highly accurate. However, its cost arguably prohibits the use of our method in practical settings, where the time budget for preparing a single print job is measured in minutes rather than hours. At the same time, it is precisely the accuracy of the prediction method that yields the high-quality reproduction we have been able to achieve.

Thus the focus of this project is to design a prediction method that achieves visually comparable accuracy at a fraction of the cost of a full, path-traced solution. Our current results are an early prototype, formulated as a spatially varying BSSRDF which can be applied to simulate sub-surface scattering in translucent, heterogeneous materials. Despite its early stage, the method already produces results that are visually accurate (see the image above), and clearly challenge the current state-of-the-art algorithms that can be applied in this setting.

Realistically simulating how translucent ('waxy') materials look is computationally expensive, because photons can scatter under their surface many times, possibly thousands. Our model is specifically designed for heterogeneous materials (for instance stone, plastic, or organic matter). It describes their properties statistically, and can therefore skip a large part of the costly simulation. One way to imagine this is as an 'oracle' that tells the simulation roughly what the material looks like underneath its surface. The simulation then no longer needs to trace the path of each individual photon explicitly.

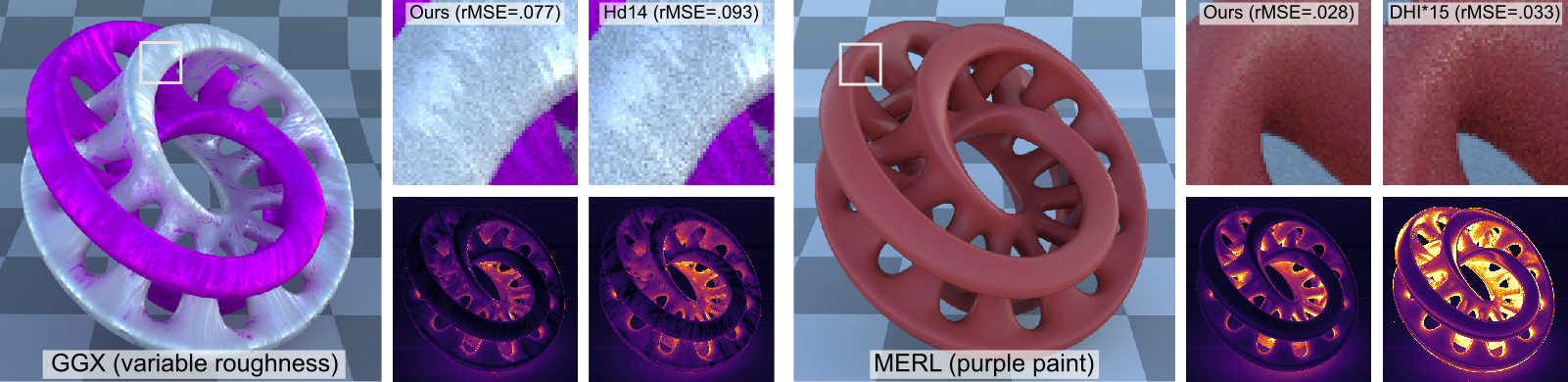

→ A Unified Framework for Efficient BRDF Sampling based on Parametric Mixture Models

@ EG Symposium on Rendering 2018

Sebastian Herholz, Oskar Elek, Jens Schindel, Jaroslav Krivanek, Hendrik Lensch

![[html]](./meta/htm.png) Project page

![[pdf]](./meta/pdf.png) Paper

![[bib]](./meta/bib.png) BibTeX entry

Virtually all existing analytic BRDF models are built from multiple functional components (e.g., Fresnel term, normal distribution function, etc.). This makes accurate importance sampling of the full model challenging, and so current solutions only cover a subset of the model's components. This leads to sub-optimal or even invalid proposed directional samples, which can negatively impact the efficiency of light transport solvers based on Monte Carlo integration. To overcome this problem, we propose a unified BRDF sampling strategy based on parametric mixture models (PMMs). We show that for a given BRDF, the parameters of the associated PMM can be defined in smooth manifold spaces, which can be compactly represented using multivariate B-Splines. These manifolds are defined in the parameter space of the BRDF and allow for arbitrary, continuous queries of the PMM representation for varying BRDF parameters, which further enables importance sampling for spatially varying BRDFs. Our representation is not limited to analytic BRDF models, but can also be used for sampling measured BRDF data. The resulting manifold framework enables accurate and efficient BRDF importance sampling with very small approximation errors.

This project was conceived back in 2016, while we were working on our product sampling paper. Our motivation was that product sampling requires precomputing and storing large databases of tabulated parametric mixtures for every material (BRDF) in the scene and each of its configurations. This becomes impractical or even outright prohibitive once the scene contains hundreds of materials, or even worse, spatially varying ones (see the image above).

The method proposed here answers this problem by creating an analytic meta-fit over the BRDF hyper-parametric space. As a representation we use parametric mixture models, such as the Gaussian or skewed Gaussian mixtures. This means that for every material and its configuration, we can obtain -- in a closed form -- the corresponding parametric mixture which then serves as a tightly fitting density function for importance sampling.

There are several advantages to this approach:

- The parametric mixture model representation is agnostic to the particular features of the fitted BRDF, so different models (physically based, empirical, or even measured) can be sampled within the same unified method.
- The representation is compatible with our product importance sampling, enabling high-quality rendering of global illumination in difficult scenes, as intended.
- The representation is versatile enough that it numerically exceeds the sampling quality of state-of-the-art dedicated sampling methods.

The only shortcoming is the current lack of support for anisotropic BRDFs, caused by their higher dimensionality. We hope to address this in the future by designing a more robust fitting method that scales to such high-dimensional functions.

To render realistic images efficiently, state-of-the-art simulations based on ray tracing have to adapt their behavior to each particular material used in the scene. This is difficult because every material can interact with light very differently. Here we propose a way around this problem: describing the materials mathematically by their actual 'visual' features instead of their low-level physical properties. As a result, not only do we make the simulation faster, we also make life easier for its developers, since they no longer need to optimize every single material individually.

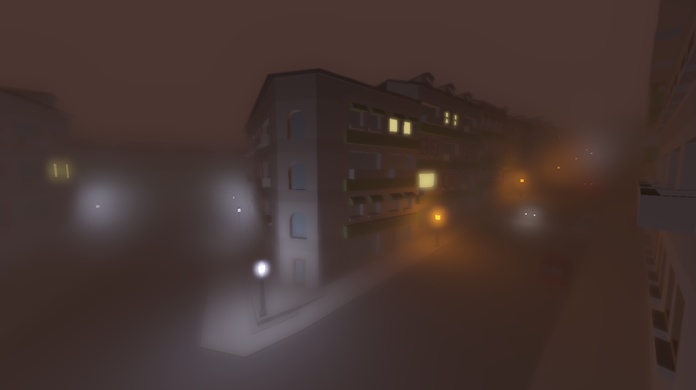

→ Real-time Light Transport in Analytically Integrable Quasi-heterogeneous Media

Best Paper @ Central European Seminar on Computer Graphics 2018

Tomas Iser, sup. Oskar Elek

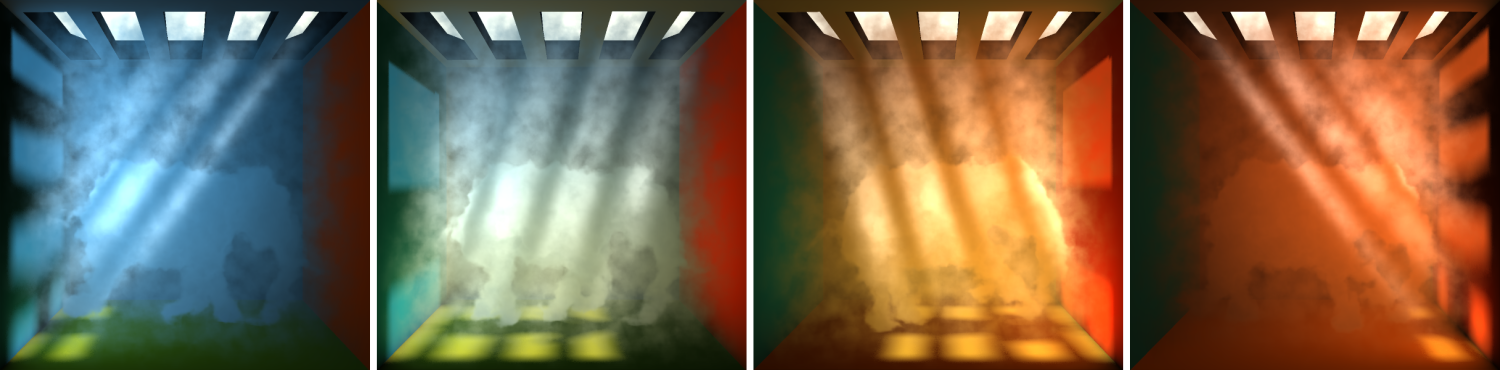

Our focus is on the real-time rendering of large-scale volumetric participating media, such as fog. Since a physically correct simulation of light transport in such media is inherently difficult, the existing real-time approaches are typically based on low-order scattering approximations or only consider homogeneous media. We present an improved image-space method for computing light transport within quasi-heterogeneous, optically thin media. Our approach is based on a physically plausible formulation of the image-space scattering kernel and analytically integrable medium density functions. In particular, we propose a novel, hierarchical anisotropic filtering technique tailored to the target environments with inhomogeneous media. Our parallelizable solution enables us to render visually convincing, temporally coherent animations with fog-like media in real time, in a bounded time of only milliseconds per frame.
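
A common example of an analytically integrable density is exponential height fog, ρ(h) = a·exp(−b·h). It is used here as an illustrative assumption, not as the paper's exact model.

Consider a straight segment of length L whose height changes linearly from h0 to h1. Its optical depth has the closed form:

τ = a·L·exp(−b·h0)·(1 − exp(−b·(h1 − h0))) / (b·(h1 − h0))

Transmittance exp(−τ) therefore costs only a few operations per pixel. The sketch below covers only this per-ray attenuation, not the image-space scattering filter. Names are hypothetical.

```python
import math


def optical_depth_exp_fog(p0, p1, a, b, eps=1e-6):
    """Optical depth along the segment p0 -> p1 through density a * exp(-b * height).

    Points are (x, y, z) with z as height. Uses the closed form, switching to a
    Taylor expansion when the segment is (nearly) horizontal to avoid 0/0.
    """
    length = math.dist(p0, p1)
    dh = p1[2] - p0[2]
    base = a * length * math.exp(-b * p0[2])
    x = b * dh
    if abs(x) < eps:
        return base * (1.0 - 0.5 * x)  # first-order expansion of (1 - e^-x) / x
    return base * (1.0 - math.exp(-x)) / x


def transmittance(p0, p1, a, b):
    """Beer-Lambert attenuation along the segment."""
    return math.exp(-optical_depth_exp_fog(p0, p1, a, b))
```

In a screen-space setting, p0 would be the camera position and p1 the point reconstructed from each pixel's depth.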

This project presents an image-space method to simulate physically-based multiple scattering at real-time speeds. It picks up where our previous project on this topic left off. The result is a marked improvement over the original method (improved filtering and support for variable-density media), yet it still runs at around 5 ms/frame on modest hardware (GTX 660). Tomas Iser (a student in our group) has done a great job here, and it shows. His BSc thesis received the Dean's award, and the subsequent paper was awarded "Best Paper", "Best Video" and "2nd Best Talk" at CESCG (a non-peer-reviewed student conference).

We look at how far pixels are from the camera, and based on that calculate how many tiny water or dust particles the light in each pixel hits. We then weaken and blur that light as much as optical theory tells us. This is fast enough to simulate believable fog or dust in 3D computer games.

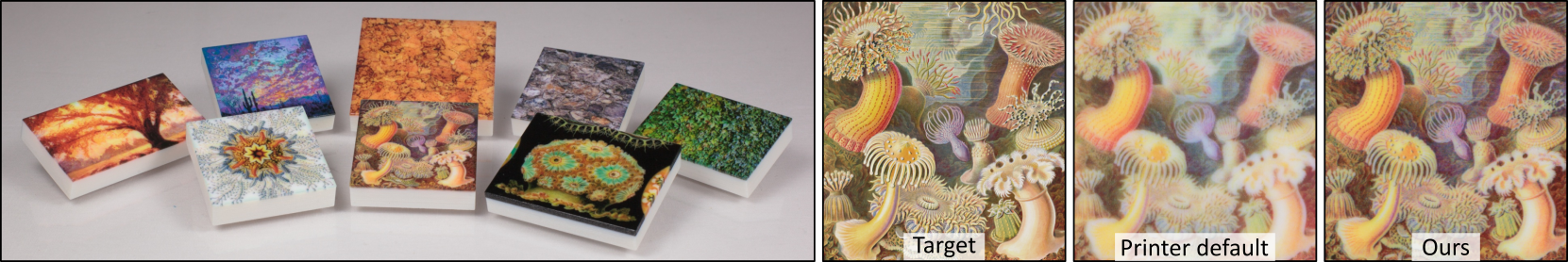

→ Scattering-aware Texture Reproduction for 3D Printing

@ ACM SIGGRAPH Asia 2017

Oskar Elek*, Denis Sumin*, Ran Zhang, Tim Weyrich, Karol Myszkowski, Bernd Bickel, Alexander Wilkie, Jaroslav Krivanek

(*joint first authors)

![[html]](./meta/htm.png) Project page

![[pdf]](./meta/pdf.png) Article

![[pdf]](./meta/htm.png) Article (ACM)

![[bib]](./meta/bib.png) BibTeX entry

Color texture reproduction in 3D printing commonly ignores volumetric light transport (cross-talk) between surface points on a 3D print. Such light diffusion leads to significant blur of details and color bleeding, and is particularly severe for highly translucent resin-based print materials. Given their widely varying scattering properties, this cross-talk between surface points strongly depends on the internal structure of the volume surrounding each surface point. Existing scattering-aware methods use simplified models for light diffusion, and often accept the visual blur as an immutable property of the print medium. In contrast, our work counteracts heterogeneous scattering to obtain the impression of a crisp albedo texture on top of the 3D print, by optimizing for a fully volumetric material distribution that preserves the target appearance. Our method employs an efficient numerical optimizer on top of a general Monte-Carlo simulation of heterogeneous scattering, supported by a practical calibration procedure to obtain scattering parameters from a given set of printer materials. Despite the inherent translucency of the medium, we reproduce detailed surface textures on 3D prints. We evaluate our system using a commercial, five-tone 3D print process and compare against the printer's native color texturing mode, demonstrating that our method preserves high-frequency features well without having to compromise on color gamut.

This project started by asking a modest question: "can we counteract the negative effects of sub-surface scattering on the quality of textured 3D prints?". These effects are typically loss of fine detail and undesired color blending. Two years later, we ended up developing a complete prototype pipeline for color reproduction on photo-polymer 3D printers, touching on the subjects of optical characterization of translucent materials, predictive rendering, color management and separation, nonlinear optimization and appearance fabrication itself.

Why is this such a difficult problem? As usual, for multiple reasons.

First, photo-polymer materials are inherently translucent, which is essential for enabling the UV-light curing process. The issue thus cannot be solved by simply making the materials optically denser.

Second, the structure of the problem is much more difficult than that of other, seemingly similar ones (such as image sharpening/enhancement, or cross-talk compensation in stereo projectors/displays). For instance, seen from the surface, the point spread function of the volumetric light transport has a large, long-tailed support. It also varies significantly in space and direction.

Third, the unwanted effects happen ex post, that is, only after the object is fabricated. Any compensation therefore has to predict them quantitatively before the object is optimized and realized physically.
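
The core idea of pre-compensating a known blur can be shown in a toy setting. The paper optimizes a full volumetric material distribution, using a Monte Carlo simulation of heterogeneous scattering. This 1D sketch instead assumes:

- a fixed, symmetric, exponentially decaying kernel in place of light transport;
- a surface albedo texture whose printable values are limited to [0, 1].

It runs projected gradient descent so that the blurred print approaches the target. The names and kernel shape are hypothetical.

```python
import math


def make_kernel(radius, falloff):
    """Normalized, symmetric 1D kernel with exponentially decaying tails."""
    k = [math.exp(-abs(i) / falloff) for i in range(-radius, radius + 1)]
    s = sum(k)
    return [v / s for v in k]


def blur(signal, kernel):
    """Circular convolution: a crude stand-in for sub-surface light diffusion."""
    n, r = len(signal), len(kernel) // 2
    return [sum(kernel[j + r] * signal[(i + j) % n] for j in range(-r, r + 1))
            for i in range(n)]


def error(albedo, target, kernel):
    """Squared difference between the blurred (printed) result and the target."""
    return sum((b - t) ** 2 for b, t in zip(blur(albedo, kernel), target))


def compensate(target, kernel, iters=200, step=1.0):
    """Projected gradient descent on 0.5 * ||blur(a) - target||^2 with 0 <= a <= 1.

    The kernel is symmetric, so blur() also applies the transposed operator needed
    for the gradient; step <= 1 is safe because a normalized nonnegative kernel
    has operator norm at most 1.
    """
    a = list(target)  # start from the uncompensated texture (assumed within [0, 1])
    for _ in range(iters):
        residual = [b - t for b, t in zip(blur(a, kernel), target)]
        grad = blur(residual, kernel)
        a = [min(1.0, max(0.0, ai - step * g)) for ai, g in zip(a, grad)]
    return a
```

Clamping to [0, 1] is what limits how much sharpening is achievable. This mirrors the trade-off between compensation strength and color gamut mentioned in the abstract.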

The main achievement of our effort is demonstrating that it is indeed possible to compensate for the unwanted effects of material translucency without modifying the materials themselves, given the necessary information and computational resources. Many questions still remain unanswered, though. Beyond the obvious issues of computational efficiency and adapting the pipeline to arbitrary geometries, we lack an understanding of how humans perceive heterogeneous translucent objects. Which cues do we use to recognize translucent objects, and how can we optimize to minimize the perceived translucency?

Check the project page for additional materials (detailed description of the measurement methodology, conference slides etc.).

We wanted to improve how 3D printers reproduce textures and colors on the surface of manufactured objects. The algorithm we invented simulates how light interacts with virtual 3D prints, and then compensates for any unwanted effects on the surface. These are color mismatch and/or blurring, and happen because the plastic printed materials are -- and need to be! -- translucent ('waxy').

→ Product Importance Sampling for Light Transport Path Guiding

2nd Best Paper @ EG Symposium on Rendering 2016

Sebastian Herholz, Oskar Elek, Jiri Vorba, Hendrik Lensch, Jaroslav Krivanek

![[html]](./meta/htm.png) Project page

![[pdf]](./meta/pdf.png) Article [↑]

![[pdf]](./meta/pdf.png) Conference slides

![[bib]](./meta/bib.png) BibTeX entry

The efficiency of Monte Carlo algorithms for light transport simulation is directly related to their ability to importance-sample the product of the illumination and reflectance in the rendering equation. Since the optimal sampling strategy would require knowledge about the transport solution itself, importance sampling most often follows only one of the known factors: the BRDF or an approximation of the incident illumination. To address this issue, we propose to represent the illumination and the reflectance factors by the Gaussian mixture model (GMM), which we fit by using a combination of weighted expectation maximization and non-linear optimization methods. The GMM representation then allows us to obtain the resulting product distribution for importance sampling on-the-fly at each scene point. For its efficient evaluation and sampling, we perform an up-front adaptive decimation of both factor mixtures. In comparison to state-of-the-art sampling methods, we show that our product importance sampling can lead to significantly better convergence in scenes with complex illumination and reflectance.

Given the recent revival of light path guiding, the natural question to ask is how to utilize the knowledge about the radiance distribution in a scene to achieve optimal path space sampling. As the theory of zero-variance sampling implies, the optimal strategy is to sample according to the full illumination integrand of the rendering equation, which translates to the product of the incident radiance and the material BRDF at any given location.

This paper proposes a solution to achieve just that. We make use of the fact that the product of Gaussian distributions can be derived in closed form, and that the result is again a (scaled) Gaussian. We therefore learn the distributions of both the incident radiance and the BRDFs in the simulated scene in the form of Gaussian mixtures, and efficiently sample according to their product. This sampling strategy introduces only a mild overhead. It is the first practical method to sample proportionally to the full illumination integrand, and is optimal up to the approximation error caused by our discrete representation. The work received the "2nd Best Student Paper" award.
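
The closed-form product mentioned above follows from this identity:

N(x; μa, σa²) · N(x; μb, σb²) = N(μa; μb, σa² + σb²) · N(x; μ, σ²)

where σ² = σa²σb² / (σa² + σb²) and μ = (μa σb² + μb σa²) / (σa² + σb²).

The sketch below applies the identity pairwise to two 1D Gaussian mixtures and then samples from the normalized product. It is simplified in two ways: the paper's mixtures are defined over directions, and its adaptive decimation of the factor mixtures is omitted. Function names are hypothetical.

```python
import math
import random


def gaussian_pdf(x, mean, var):
    """Density of N(mean, var) at x."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def product_of_mixtures(mix_a, mix_b):
    """Closed-form product of two 1D Gaussian mixtures given as (weight, mean, var).

    Returns the normalized product mixture (len(a) * len(b) components) and the
    normalization constant, i.e. the integral of the unnormalized product.
    """
    comps = []
    for wa, ma, va in mix_a:
        for wb, mb, vb in mix_b:
            var = va * vb / (va + vb)
            mean = (ma * vb + mb * va) / (va + vb)
            scale = wa * wb * gaussian_pdf(ma, mb, va + vb)
            comps.append((scale, mean, var))
    norm = sum(c[0] for c in comps)
    return [(w / norm, m, v) for w, m, v in comps], norm


def mixture_pdf(mix, x):
    """Density of a (weight, mean, var) mixture at x."""
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in mix)


def sample_mixture(mix, rng):
    """Pick a component by weight, then draw from that Gaussian."""
    _, mean, var = rng.choices(mix, weights=[w for w, _, _ in mix])[0]
    return rng.gauss(mean, math.sqrt(var))
```

The component count grows as N·M, which is why decimating both factor mixtures up front matters for efficiency.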

Every modern algorithm that renders photo-realistic images of 3D scenes suffers from some kind of error. For algorithms based on ray tracing, the error is visible as 'noise' in the computed image. The theory of light transport tells us that, to minimize the noise, we need to shoot rays in the directions where light comes from and, at the same time, where materials reflect the most light! Our work allows us to do that optimally, based on well-designed statistical approximations.

→ Efficient Methods for Physically-based Rendering of Participating Media

PhD Thesis @ Max Planck Institut Informatik 2015

Oskar Elek, sup. Tobias Ritschel and Hans-Peter Seidel

![[pdf]](./meta/pdf.png) Thesis text [↑]

![[pptx]](./meta/ppt.png) Defense slides

This thesis proposes several novel methods for realistic synthesis of images containing participating media. This is a challenging problem, due to the many complex ways in which light interacts with participating media, but also an important one, since such media are ubiquitous in our environment and therefore are one of the main constituents of its appearance. The main paradigm we follow is designing efficient methods that provide their user with interactive feedback, but are still physically plausible.

The presented contributions have varying degrees of specialisation and, in a loose connection to that, their resulting efficiency. First, the screen-space scattering algorithm simulates scattering in homogeneous media, such as fog and water, as a fast image filtering process. Next, the amortised photon mapping method focuses on rendering clouds as arguably one of the most difficult media due to their high scattering anisotropy. Here, interactivity is achieved through adapting to certain conditions specific to clouds. A generalisation of this approach is principal-ordinates propagation, which tackles a much wider class of heterogeneous media. The resulting method can handle almost arbitrary optical properties in such media, thanks to a custom finite-element propagation scheme. Finally, spectral ray differentials aim at an efficient reconstruction of chromatic dispersion phenomena, which occur in transparent media such as water, glass and gemstones. This method is based on analytical ray differentiation and as such can be incorporated into any ray-based rendering framework, increasing the efficiency of reproducing dispersion by about an order of magnitude.

All four proposed methods achieve efficiency primarily by utilising high-level mathematical abstractions, building on the understanding of the underlying physical principles that guide light transport. The methods have also been designed around simple data structures, allowing high execution parallelism and removing the need to rely on any sort of preprocessing. Thanks to these properties, the presented work is not only suitable for interactively computing light transport in participating media, but also allows dynamic changes to the simulated environment, all while maintaining high levels of visual realism.

Blood, sweat and tears: the definitive compilation of my doctoral work at MPI. Now with an informal introduction, 30 pages of relevant rendering and optics background, and extended discussion of what follows from all this. Please enjoy and leave a like ;)

→ Spectral Ray Differentials

Best Student Paper @ EG Symposium on Rendering 2014

Oskar Elek, Pablo Bauszat, Tobias Ritschel, Marcus Magnor, Hans-Peter Seidel

![[html]](./meta/htm.png) Project page

![[pdf]](./meta/pdf.png) Article [↑]

![[pdf]](./meta/pdf.png) Derivation

![[pdf]](./meta/pdf.png) Raw images

![[pdf]](./meta/pdf.png) Slides

![[bib]](./meta/bib.png) BibTeX entry

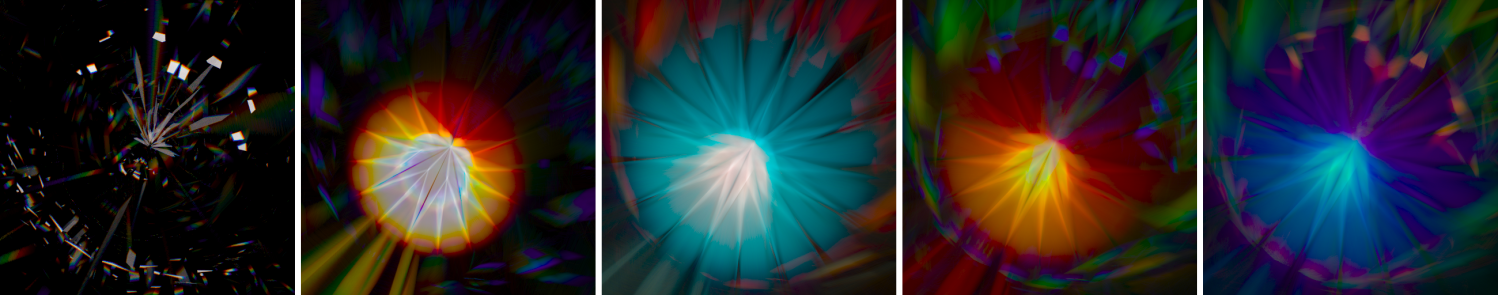

Light refracted by a dispersive interface leads to beautifully colored patterns that can be rendered faithfully with spectral Monte-Carlo methods. Regrettably, results often suffer from chromatic noise or banding, requiring high sampling rates and large amounts of memory compared to renderers operating in some trichromatic color space. Addressing this issue, we introduce spectral ray differentials, which describe the change of light direction with respect to changes in the spectrum. In analogy with the classic ray and photon differentials, this information can be used for filtering in the spectral domain. Effectiveness of our approach is demonstrated by filtering for offline spectral light and path tracing as well as for an interactive GPU photon mapper based on splatting. Our results show considerably less chromatic noise and spatial aliasing while retaining good visual similarity to reference solutions with negligible overhead in the order of milliseconds.

Caustics are image-like phenomena resulting purely from a variable distribution of light caused by refraction -- for instance under a glass of wine or at the bottom of a swimming pool. While the traditional challenge in the rendering community has been efficiently solving for the light transport as such, we focused on the phenomenon that goes hand in hand with refraction: dispersion of light. While we all know dispersion in the form of rainbows, this phenomenon occurs on virtually all refractive objects due to the spectral variability of the refractive index.

Our work here describes a reconstruction approach, which is based on tracing partial derivatives with respect to a change of light frequency; we call these 'spectral differentials', following an already established nomenclature in rendering. Spectral differentials inform the renderer about the direction in which the dispersion predominantly occurs, so that a higher-quality reconstruction can be performed. This is applicable in offline Monte-Carlo rendering, but also in the real-time domain (as demonstrated in this video). This work has received the "Best Student Paper" award at EGSR 2014.
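To illustrate what a spectral differential is, the sketch below (a simplification, not the paper's full derivation) differentiates the refracted direction given by Snell's law with respect to wavelength. It assumes a hypothetical glass whose refractive index follows Cauchy's equation n(λ) = A + B/λ² with illustrative coefficients, light entering from air, and no total internal reflection. The analytic derivative can be checked against a finite difference.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Illustrative (hypothetical) Cauchy coefficients for a glass-like material; B in um^2.
CAUCHY_A, CAUCHY_B = 1.5046, 0.00420


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(s: float, a: Vec3) -> Vec3:
    return (s * a[0], s * a[1], s * a[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ior(lam_um: float) -> float:
    return CAUCHY_A + CAUCHY_B / lam_um ** 2


def ior_derivative(lam_um: float) -> float:
    return -2.0 * CAUCHY_B / lam_um ** 3


def refract(i: Vec3, n: Vec3, eta: float) -> Vec3:
    """Snell's law for unit direction i and unit normal n facing the incident side; eta = n1 / n2."""
    ci = -dot(n, i)
    ct = math.sqrt(1.0 - eta * eta * (1.0 - ci * ci))  # assumes no total internal reflection
    return add(scale(eta, i), scale(eta * ci - ct, n))


def spectral_differential(i: Vec3, n: Vec3, lam_um: float, n1: float = 1.0) -> Vec3:
    """Analytic d(refracted direction)/d(wavelength), per micrometre."""
    n2 = ior(lam_um)
    eta = n1 / n2
    ci = -dot(n, i)
    ct = math.sqrt(1.0 - eta * eta * (1.0 - ci * ci))
    # Chain rule: dt/dlambda = dt/deta * deta/dlambda.
    dt_deta = add(i, scale(ci + eta * (1.0 - ci * ci) / ct, n))
    deta_dlam = -n1 * ior_derivative(lam_um) / (n2 * n2)
    return scale(deta_dlam, dt_deta)
```

Multiplying `spectral_differential(...)` by the width of a spectral sample gives the approximate angular spread of the refracted ray across that band, which is the kind of information a reconstruction filter can use to stretch its footprint along the dispersion direction.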

→ Progressive Spectral Ray Differentials

@ Vision, Modelling and Visualization workshop 2014

Oskar Elek, Pablo Bauszat, Tobias Ritschel, Marcus Magnor, Hans-Peter Seidel

![[pdf]](./meta/pdf.png) Article

![[pdf]](./meta/pdf.png) Conference slides

![[bib]](./meta/bib.png) BibTeX entry

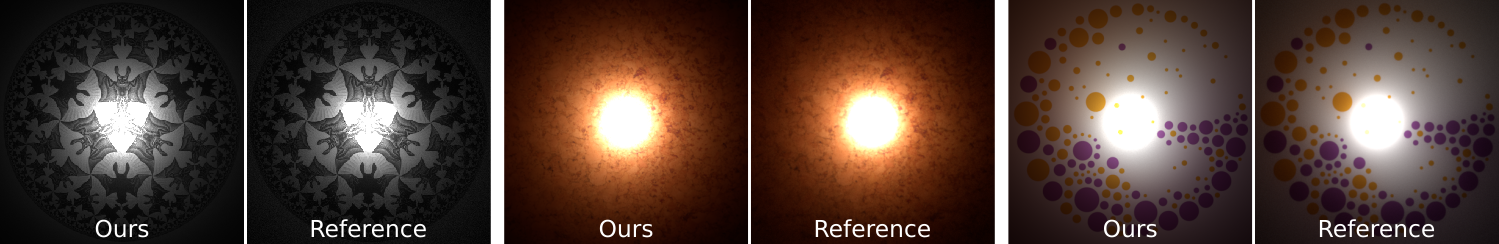

Light travelling through refractive objects can lead to beautiful colourful illumination patterns resulting from dispersion on the object interfaces. While this can be accurately simulated by stochastic Monte-Carlo methods, their application is costly and leads to significant chromatic noise. This is greatly improved by applying spectral ray differentials, albeit at the cost of introducing bias into the solution. We propose progressive spectral ray differentials, adapting concepts from other progressive Monte-Carlo methods. Our approach takes full advantage of the variance-reduction properties of spectral ray differentials but progressively converges to the correct, unbiased solution in the limit.

An extension of the above work. As with other reconstruction methods that assign a finite support to the phenomenon in question, our basic method, too, suffers from spatial bias. This extension addresses the issue: inspired by progressive photon mapping approaches, we systematically shrink the spatial support defined by the traced differentials, so that in the limit the solution consistently converges to the ground truth. Among other benefits, this enables working with refraction of extreme magnitudes -- for instance produced by virtual metamaterials as shown in the above image (contrasted with a caustic produced by a regular diamond in the leftmost panel). Many animated examples are also available on the project page.
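The shrinking-support idea can be sketched with the radius schedule of probabilistic progressive photon mapping (Knaus and Zwicker), r²ᵢ₊₁ = r²ᵢ (i + α)/(i + 1) with 0 < α < 1. Whether this paper uses exactly this schedule is an assumption; treating the resulting radius as a scale factor for the differential footprint is a hypothetical illustration.

```python
import math
from typing import Iterator


def radius_schedule(r0: float, alpha: float, passes: int) -> Iterator[float]:
    """Yield the kernel radius for passes 1..passes (probabilistic-PPM-style schedule, assumed here)."""
    r2 = r0 * r0
    for i in range(1, passes + 1):
        yield math.sqrt(r2)
        r2 *= (i + alpha) / (i + 1)  # shrink the squared radius before the next pass


# Hypothetical use: scale a footprint of initial size 1.0 over 5 passes.
for k, r in enumerate(radius_schedule(1.0, 2.0 / 3.0, 5), start=1):
    print(k, round(r, 4))
```

Because the squared radius decays only roughly as i^(α-1), the support shrinks slowly enough for the noise to keep averaging out, while the bias vanishes in the limit.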

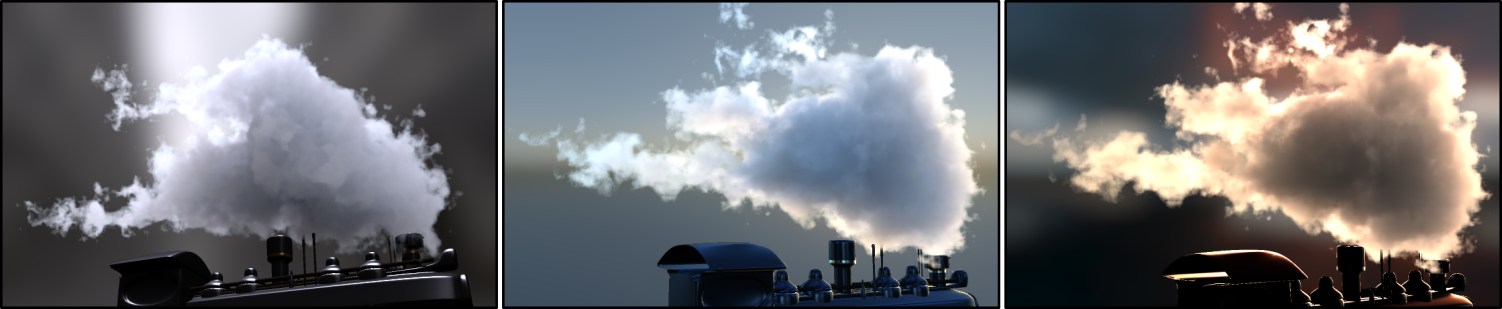

→ Principal-Ordinates Propagation for Real-Time Rendering of Participating Media

@ Elsevier Computers and Graphics 2014

Oskar Elek, Tobias Ritschel, Carsten Dachsbacher, Hans-Peter Seidel

![[html]](./meta/htm.png) Project page

![[pdf]](./meta/pdf.png) Article [↑]

![[pdf]](./meta/pdf.png) Derivations

![[avi]](./meta/zip.png) Video [↑]

![[bib]](./meta/bib.png) BibTeX entry

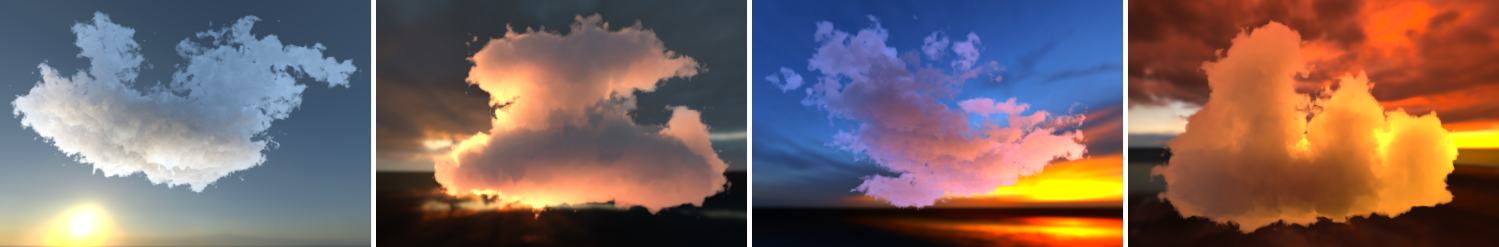

Efficient light transport simulation in participating media is challenging in general, but especially if the medium is heterogeneous and exhibits significant multiple anisotropic scattering. We present Principal-Ordinates Propagation, a novel finite-element method that achieves real-time rendering speeds on modern GPUs without imposing any significant restrictions on the rendered participating medium. We achieve this by dynamically decomposing all illumination into directional and point light sources, and propagating the light from these virtual sources in independent discrete propagation domains. These are individually aligned with approximate principal directions of light propagation from the respective light sources. Such decomposition allows us to use a very simple and computationally efficient unimodal basis for representing the propagated radiance, instead of using a general basis such as spherical harmonics. The resulting approach is biased but physically plausible, and largely reduces the rendering artifacts inherent to existing finite-element methods. At the same time it allows for virtually arbitrary scattering anisotropy, albedo, and other properties of the simulated medium, without requiring any precomputation.

One of the long-lasting challenges in rendering has been to efficiently simulate optically dense media with significant anisotropic scattering. These media (comprising clouds, smoke, vapor, various liquids, etc.) have been notoriously difficult to handle even for Monte-Carlo and other offline methods. Most approaches therefore apply similarity theory and treat the media as isotropically scattering, which leads to a lack of the directionally dependent features that often define the appearance of these media (such as the silver lining of clouds).

Here we propose a novel way to handle anisotropic scattering in media. The core idea of "principal-ordinates propagation" is to decompose the incoming illumination into a discrete set of salient directions (similar to instant radiosity methods) and propagate the light energy along these directions (ordinates) separately. This key step enables an efficient way to represent both the intensity and directional distribution of the propagated radiance using the unimodal Henyey-Greenstein distribution (similar to a spherical Gaussian). Using different propagation grid geometries, we can compute volumetric transport from directional and point sources, and use this to propagate environment illumination, local reflections, and even camera importance to achieve a real-time reproduction of difficult anisotropic effects -- see the accompanying video.
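The unimodal Henyey-Greenstein lobe mentioned above has a single parameter g, which equals the mean cosine of the scattering angle. The sketch below only shows how that lobe is evaluated and importance-sampled by inverting its CDF; it does not reproduce the propagation scheme itself, and the function names are illustrative.

```python
import math
import random


def hg_phase(cos_theta: float, g: float) -> float:
    """Henyey-Greenstein phase function per steradian; theta is measured from the forward direction."""
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom ** 1.5)


def hg_sample_cos(u: float, g: float) -> float:
    """Map a uniform u in [0, 1] to cos(theta) distributed according to hg_phase (inverse CDF)."""
    if abs(g) < 1e-4:
        return 2.0 * u - 1.0  # isotropic limit
    s = (1.0 - g * g) / (1.0 - g + 2.0 * g * u)
    cos_theta = (1.0 + g * g - s * s) / (2.0 * g)
    return max(-1.0, min(1.0, cos_theta))  # clamp rounding error


# Strongly forward-scattering lobe, as in clouds: sampled cosines cluster near 1.
rng = random.Random(0)
samples = [hg_sample_cos(rng.random(), 0.8) for _ in range(5)]
print(samples)
```

Inverse-CDF sampling keeps the sampled directions distributed exactly like the lobe, so the phase function and the sampling pdf cancel in a Monte-Carlo estimator.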

→ Interactive Light Scattering with Principal-Ordinate Propagation

Best Student Paper @ Graphics Interface 2014

Oskar Elek, Tobias Ritschel, Carsten Dachsbacher, Hans-Peter Seidel

![[pdf]](./meta/pdf.png) Paper

![[pdf]](./meta/pdf.png) Conference slides

![[bib]](./meta/bib.png) BibTeX entry

Earlier version of the above work, published at the Graphics Interface conference, where it received the Michael A. J. Sweeney Award for "Best Student Paper". Compared to the above Computers & Graphics article, this version lacks the isotropic residual propagation phase, which, however, is mainly a performance improvement. The term "principal-ordinates propagation" was already coined in this paper.

→ Real-Time Screen-Space Scattering in Homogeneous Environments

@ IEEE Computer Graphics and Applications 2013

Oskar Elek, Tobias Ritschel, Hans-Peter Seidel

This work presents an approximate algorithm for computing light scattering within homogeneous participating environments in screen space. Instead of simulating the full global illumination in participating media we model the scattering process by a physically-based point spread function. To do this efficiently we apply the point spread function by performing a discrete hierarchical convolution in a texture MIP map. We solve the main problem of this approach, illumination leaking, by designing a custom anisotropic incremental filter. Our solution is fully parallel, runs at hundreds of frames per second at usual screen resolutions and is directly applicable in most existing 2D or 3D rendering architectures.

In this project we tried to approximate light scattering in homogeneous media (most notably water and fog) by an image-space post-processing algorithm (requiring just a depth buffer as an additional input). This is possible because from the user's perspective, the high-level behavior of scattering is similar to blurring (see our 2017 fabrication project). The result is a very fast post-processing procedure that takes only a couple of milliseconds for HD images and generates results comparable to path tracing in the intended conditions. As such it can be seamlessly integrated into game engines as a better substitute for the standard exponential fog.
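A much-simplified sketch of the hierarchical-convolution idea, under loud assumptions: the image is a grayscale list of rows whose dimensions are divisible by 2^(levels-1); the point spread function is discretised by a hypothetical rule that keeps the transmitted fraction exp(-σ·depth) sharp and spreads the scattered remainder evenly over coarser MIP levels; and the paper's anisotropic incremental filter against illumination leaking is omitted entirely.

```python
import math
from typing import List

Image = List[List[float]]


def downsample(img: Image) -> Image:
    """Halve the resolution by averaging 2x2 blocks (one MIP step)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[0.25 * (img[2 * y][2 * x] + img[2 * y][2 * x + 1]
                     + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1])
             for x in range(w)] for y in range(h)]


def build_mips(img: Image, levels: int) -> List[Image]:
    mips = [img]
    for _ in range(levels - 1):
        mips.append(downsample(mips[-1]))
    return mips


def level_weights(depth: float, sigma_s: float, levels: int) -> List[float]:
    """Hypothetical PSF discretisation: unscattered light stays sharp,
    scattered light is spread evenly over the coarser levels."""
    t = math.exp(-sigma_s * depth)  # transmitted (unscattered) fraction
    if levels == 1:
        return [1.0]
    spread = (1.0 - t) / (levels - 1)
    return [t] + [spread] * (levels - 1)


def screen_space_scatter(img: Image, depth: Image, sigma_s: float, levels: int) -> Image:
    """Per pixel, blend MIP levels (nearest-neighbour lookup) with depth-dependent weights."""
    mips = build_mips(img, levels)
    out = []
    for y in range(len(img)):
        row = []
        for x in range(len(img[0])):
            w = level_weights(depth[y][x], sigma_s, levels)
            row.append(sum(w[k] * mips[k][y >> k][x >> k] for k in range(levels)))
        out.append(row)
    return out
```

Because each pixel's weights sum to one, a constant image stays constant regardless of depth, and zero depth reproduces the input; distant pixels receive a wider, blurrier contribution, which mimics the high-level "scattering as blurring" behaviour described above.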

→ Interactive Cloud Rendering Using Temporally-Coherent Photon Mapping

@ Graphics Interface 2012

Oskar Elek, Tobias Ritschel, Alexander Wilkie, Hans-Peter Seidel

![[pdf]](./meta/pdf.png) Paper

![[pdf]](./meta/pdf.png) Conference slides

![[avi]](./meta/zip.png) Video

![[bib]](./meta/bib.png) BibTeX entry